Introduction

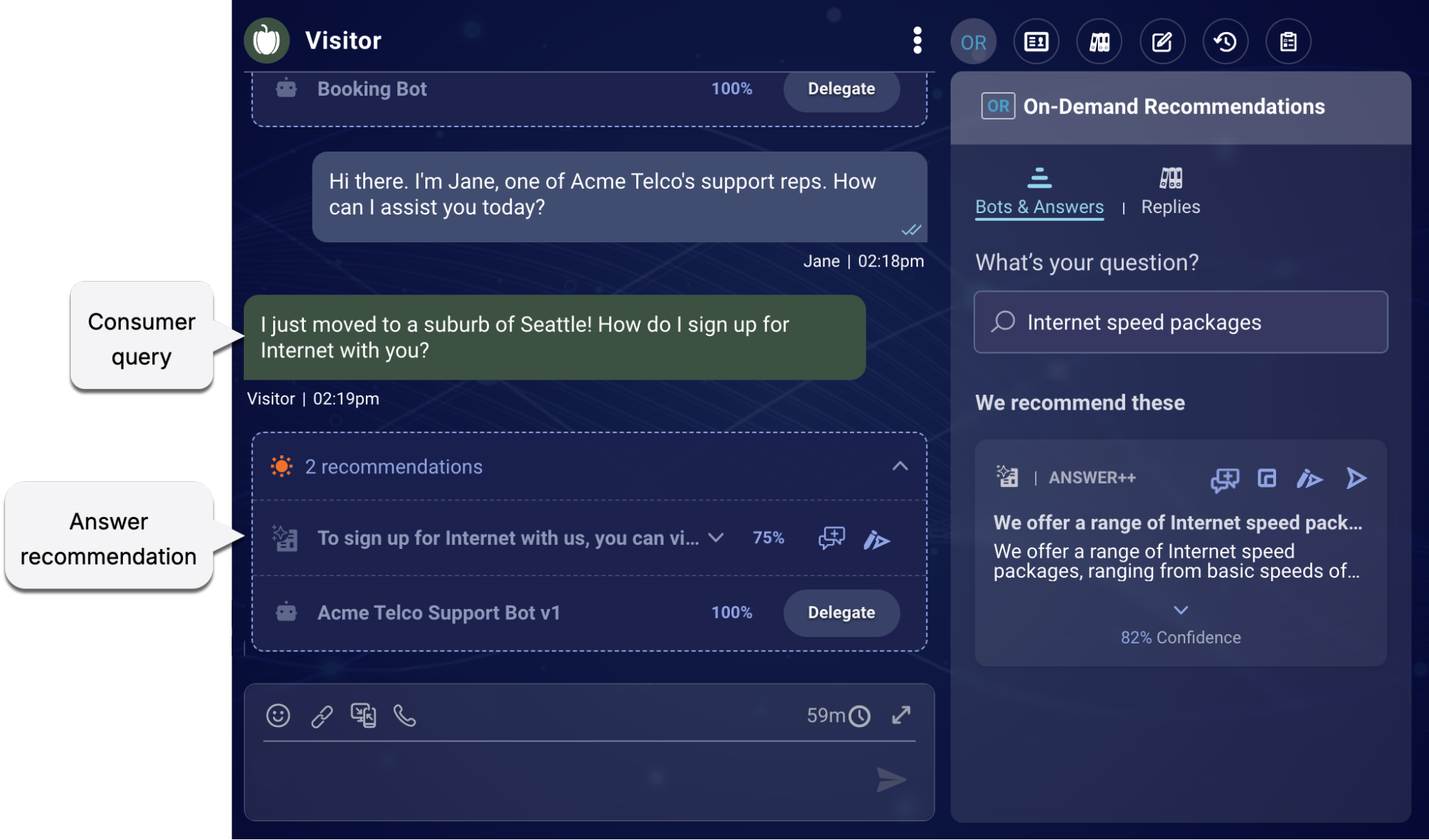

Answer recommendations are relevant responses that agents can use to resolve consumer queries. They're drawn from the knowledge base that contains all of your knowledge content. They can even be enriched via Generative AI to make them warm and contextually aware.

When answer recommendations are used, conversational outcomes are more consistent and more efficient. This improves agent productivity as well as the overall operational metrics for the contact center.

This topic discusses important concepts related to answer recommendations.

Videos

Watch our videos on:

- Processing the user's query for better search

- Processing the answers for better results

Both of these concepts are introduced in this article.

User queries: contextualize

Language is dynamic and efficient, so the consumer’s query often doesn’t include enough context to retrieve a high-quality answer from a knowledge base. Consider a conversation about the iPhone 15 Pro in which the consumer’s final query is, “How much is it?” Searching a knowledge base with this query alone is unlikely to return a relevant answer, because the query never names the product.

Conversation Assist has an AI-powered solution to this problem: before performing the knowledge base search, you can pass the consumer’s query, along with some conversation context, to a LivePerson small language model so that the model can enhance (rephrase) the query. This can significantly improve the relevancy and accuracy of the answer recommendations that are offered to your agents.

Turn on this behavior in the knowledge base rule. (Currently, it’s not available in bot rules.)
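
To make the idea concrete, here is a minimal Python sketch of what "query plus conversation context" might look like as input to a rephrasing model. It is an illustration only, not the Conversation Assist implementation: the `Turn` class, the `build_rephrase_input` and `contextualize` functions, the prompt layout, and the `max_context_turns` parameter are all hypothetical, and the rephrasing model is represented by a caller-supplied function.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Turn:
    speaker: str  # hypothetical labels, e.g. "consumer" or "agent"
    text: str


def build_rephrase_input(turns: List[Turn], max_context_turns: int = 4) -> str:
    """Combine recent conversation context with the consumer's final query."""
    if not turns:
        raise ValueError("conversation must contain at least one turn")
    *history, last = turns
    # Keep only the most recent turns; guard against the [-0:] slice,
    # which would return the whole history instead of nothing.
    context = history[-max_context_turns:] if max_context_turns > 0 else []
    lines = [f"{t.speaker}: {t.text}" for t in context]
    return "Context:\n" + "\n".join(lines) + f"\nQuery: {last.text}"


def contextualize(turns: List[Turn], rephrase: Callable[[str], str]) -> str:
    """Return the enhanced query produced by the supplied rephrasing function."""
    return rephrase(build_rephrase_input(turns))


conversation = [
    Turn("consumer", "I'm looking at the iPhone 15 Pro."),
    Turn("agent", "Great choice! What would you like to know?"),
    Turn("consumer", "How much is it?"),
]

# Stand-in for the language model: a real model would generate this text.
def fake_model(prompt: str) -> str:
    return "How much is the iPhone 15 Pro?"

enhanced_query = contextualize(conversation, fake_model)
```

The enhanced query names the product explicitly, so it is the enhanced query, not the original "How much is it?", that would be used for the knowledge base search.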

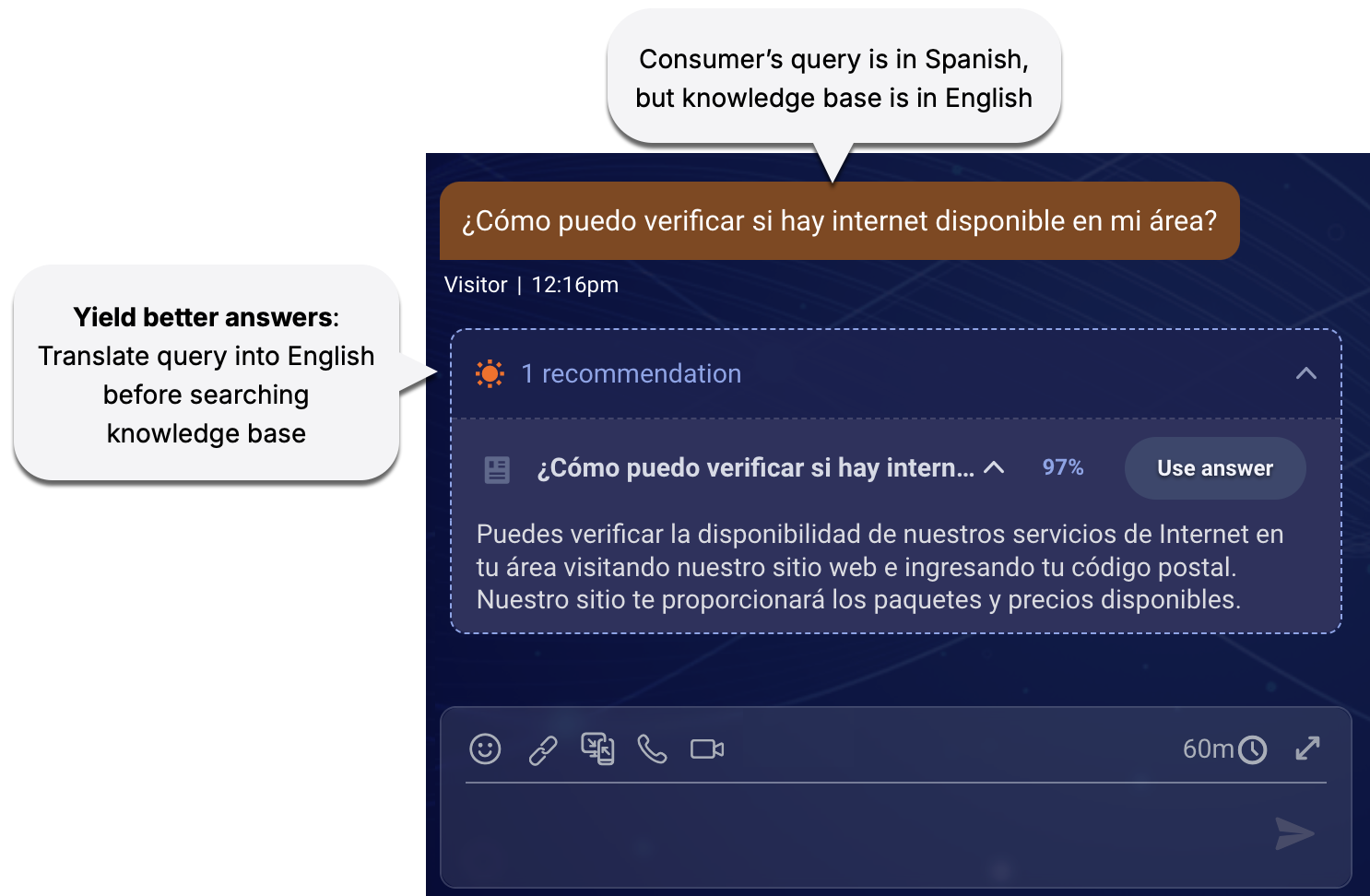

User queries: custom process via Generative AI

You can use an LLM to perform any kind of custom processing of the user’s query before performing the knowledge base search for answers. For example, if you’re supporting cross-lingual queries (say the query is in Spanish, but your knowledge base is in English), you might want to translate the query into the language of the knowledge base that will be searched for answers. Doing so can improve the search results significantly.
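
The cross-lingual case can be sketched as a preprocessing step that runs before the search. The Python below is an assumption-laden illustration, not product code: `search_with_preprocessing` is a hypothetical helper, and the translation table and keyword search are toy stand-ins for an LLM call and for the knowledge base search.

```python
from typing import Callable, Dict, List

# Toy English knowledge base: title keywords -> answer text (illustrative data).
ARTICLES: Dict[str, str] = {
    "reset password": "To reset your password, choose Forgot password on the sign-in page.",
    "track order": "Track an order from the Orders page in your account.",
}


def toy_translate_to_english(query: str) -> str:
    """Stand-in for an LLM translation step; unknown queries pass through unchanged."""
    table = {"¿cómo restablezco mi contraseña?": "how do I reset my password?"}
    return table.get(query.strip().lower(), query)


def toy_search(query: str) -> List[str]:
    """Stand-in for the knowledge base search: match when every title word appears."""
    q = query.lower()
    return [
        answer
        for title, answer in ARTICLES.items()
        if all(word in q for word in title.split())
    ]


def search_with_preprocessing(
    query: str,
    preprocess: Callable[[str], str],
    search: Callable[[str], List[str]],
) -> List[str]:
    """Apply custom processing to the query, then search with the processed query."""
    return search(preprocess(query))


spanish_query = "¿Cómo restablezco mi contraseña?"
without_processing = toy_search(spanish_query)
with_processing = search_with_preprocessing(
    spanish_query, toy_translate_to_english, toy_search
)
```

In this toy setup the untranslated Spanish query finds nothing in the English knowledge base, while the translated query finds the password article.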

Benefits:

- Boost the accuracy and relevancy of answers.

- Support a global reach with multi-lingual capabilities.

Related info:

- Learn about related KnowledgeAI guidelines.

- Learn how to set up custom processing in a knowledge base rule in Conversation Assist.

Answers: enrich via Generative AI

If you’re using Conversation Assist to offer answer recommendations to your agents, you can offer ones that are enriched by KnowledgeAI's LLM-powered answer enrichment service. The resulting answers, formulated via Generative AI, are accurate, contextually aware, and natural-sounding.

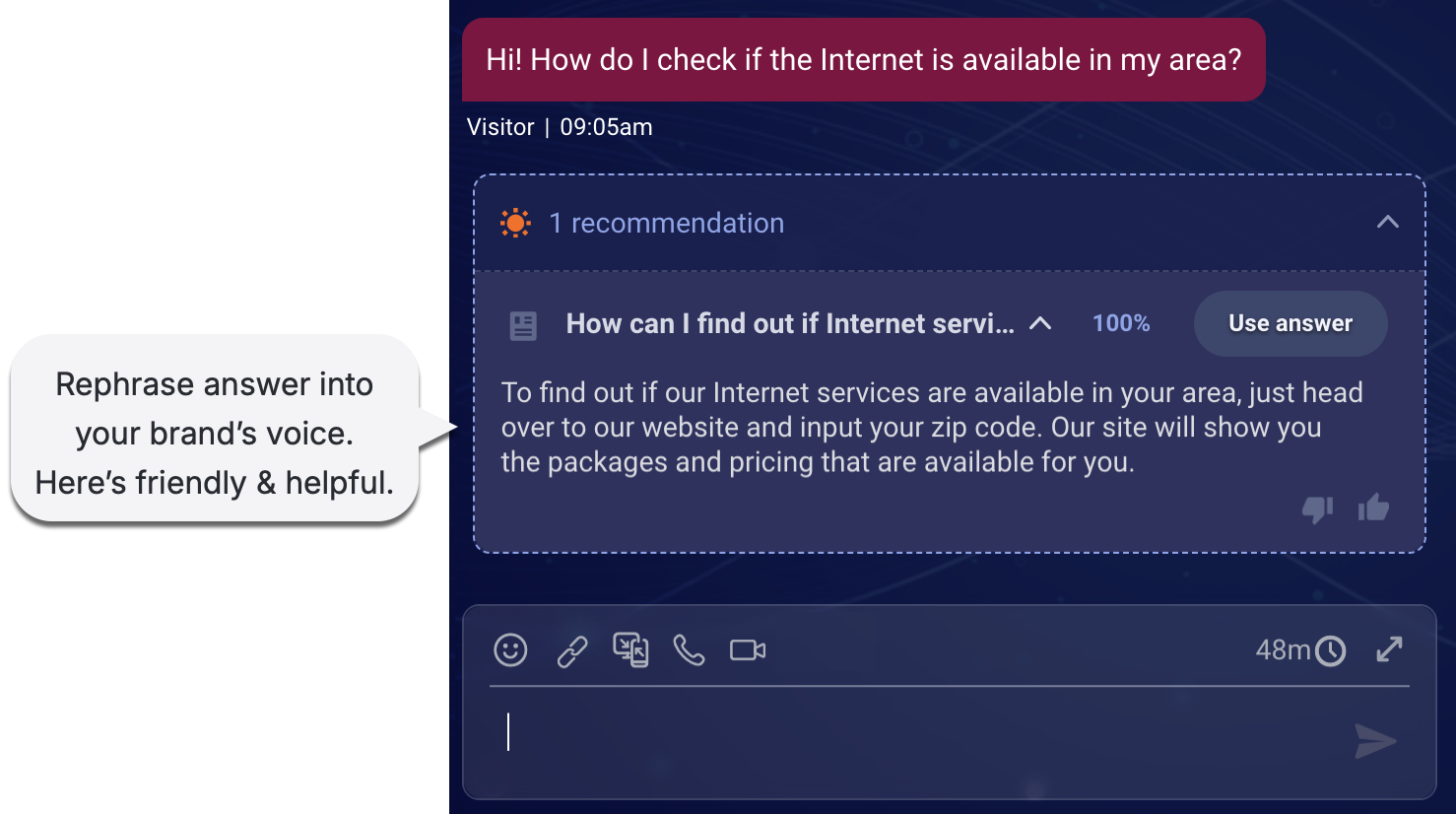

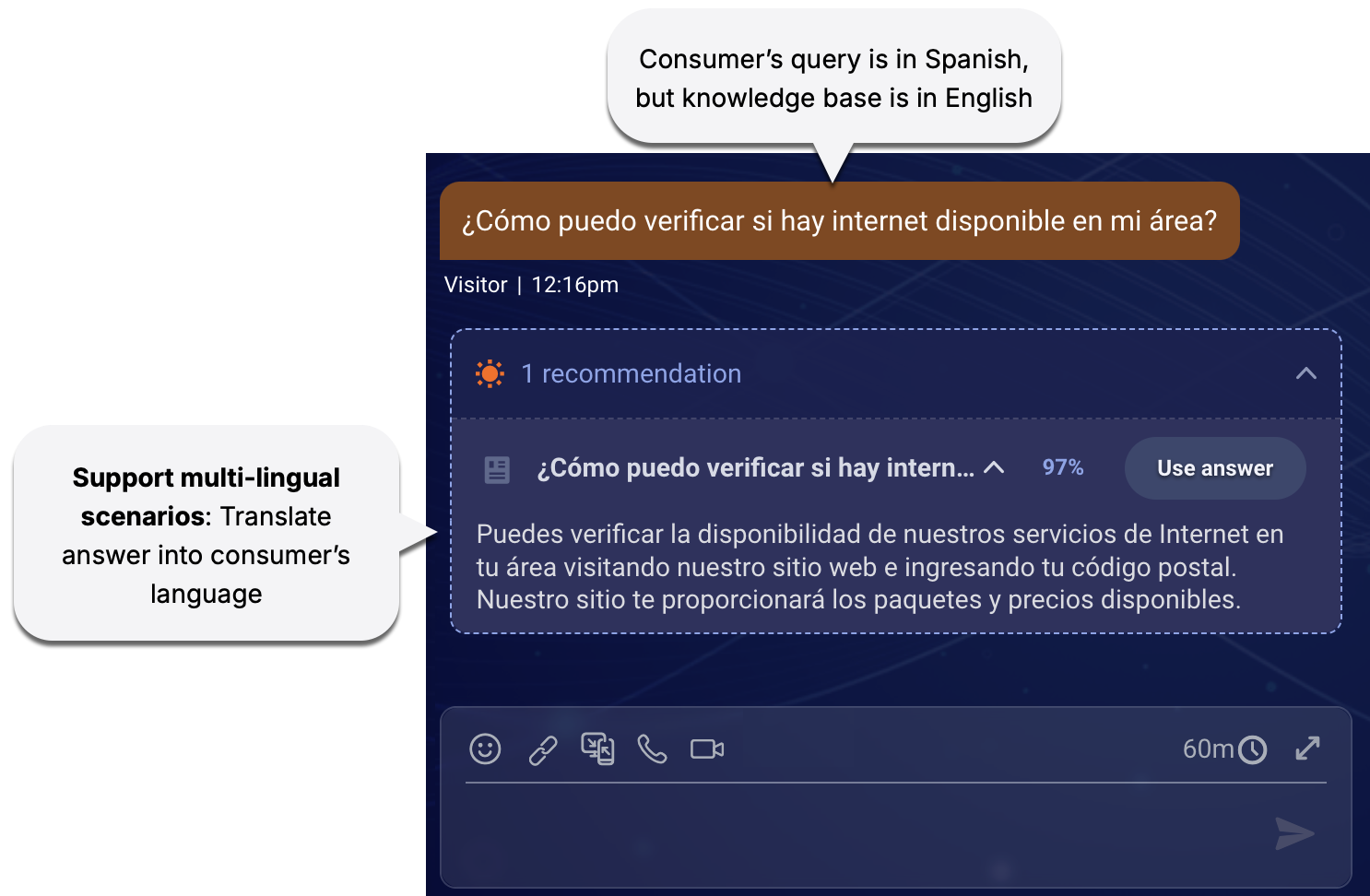

Answers: custom process via Generative AI

You can use an LLM to perform any kind of custom processing of the answers returned by KnowledgeAI, before returning those answers to Conversation Assist.

A popular use case for this is to rephrase the answers so that they better conform to your brand’s voice.

Or, you might need to translate the answer into the consumer’s language.
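
As a rough sketch of this kind of post-processing, the Python below applies a chain of processing steps to each answer before it is handed back. Everything here is hypothetical: `process_answers` and `toy_brand_voice` are illustrative names, and the brand-voice step is a trivial stand-in for what would really be an LLM call.

```python
from typing import Callable, Iterable, List

AnswerProcessor = Callable[[str], str]


def process_answers(
    answers: Iterable[str], processors: Iterable[AnswerProcessor]
) -> List[str]:
    """Run every answer through each processing step, in order."""
    steps = list(processors)  # materialize once so the steps can be reused per answer
    processed = []
    for answer in answers:
        for step in steps:
            answer = step(answer)
        processed.append(answer)
    return processed


def toy_brand_voice(answer: str) -> str:
    """Stand-in for an LLM rephrasing step that applies a friendlier brand voice."""
    return "Happy to help! " + answer


raw_answers = ["Returns are free for 30 days."]
branded_answers = process_answers(raw_answers, [toy_brand_voice])
```

Because the steps are plain functions, a translation step could be appended to the same list when the consumer's language differs from the knowledge base's language.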

Benefits:

- Promote smarter conversations via optimized answers.

- Ensure brand compliance by aligning communications with your brand’s voice.

- Support a global reach with multi-lingual capabilities.

- Save agents time and reduce manual edits.

Related info:

- Learn about related KnowledgeAI guidelines.

- Learn how to set up custom processing in a knowledge base rule in Conversation Assist.

Summary or detail?

An article (answer) in a knowledge base in KnowledgeAI™ has two primary fields for content: Summary and Detail. Confused about which one is used to offer answer recommendations to agents? Learn the basic concepts about Summary and Detail.

Also see our best practices for using Summary and Detail.

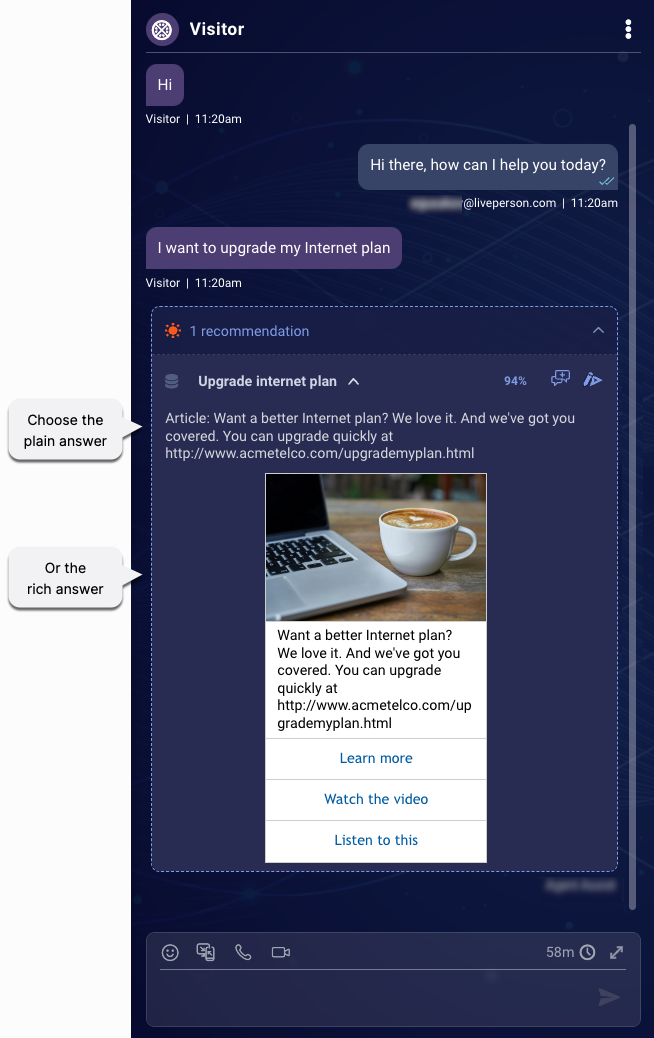

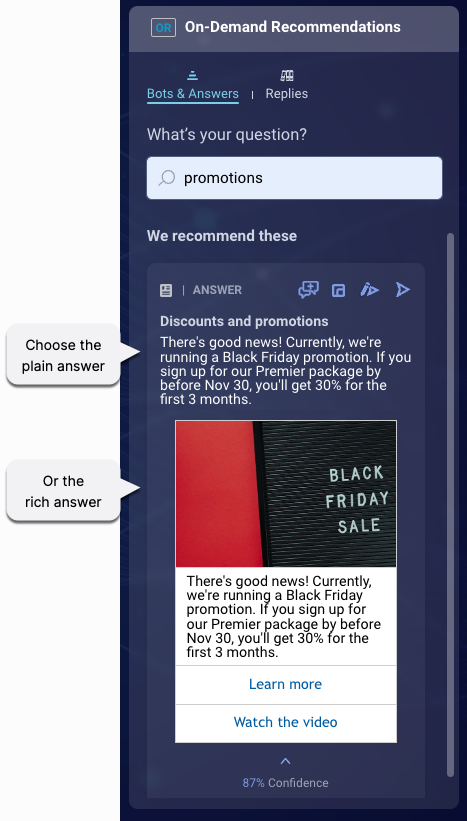

Rich or plain?

Currently, rich answer recommendations are supported only on the Web and Mobile SDK channels.

When offering your agents answer recommendations, you want them to be relevant. But you also want them to be engaging, right? We agree.

So, when it comes to offering answer recommendations, you have options: you can offer plain text answers only, or you can offer both rich and plain answers and let your agents choose which type to send within the conversation. The examples below show the latter.

Recommended answer offered in a conversation

Recommended answer offered on demand

Consider supporting rich answers. Their multimedia nature makes them much more engaging than plain answers, leading to a best-in-class experience for the consumer. Learn more.