Not ready to make use of Generative AI and LLMs? No problem. You don't need to incorporate these technologies into your solution. Learn how to automate answers without Generative AI.

What's a KnowledgeAI agent?

If you’re using LivePerson Conversation Builder bots to automate answers to consumers, you can send answers that are enriched by KnowledgeAI™'s LLM-powered answer enrichment service. We call bots like these KnowledgeAI agents. The resulting answers, formulated via Generative AI, are:

- Grounded in knowledge base content

- Contextually aware

- Natural-sounding

Key benefits

- Maximize your existing content investments by seamlessly integrating your existing knowledge bases, systems, and documents to answer frequently asked questions with a robust retrieval-augmented generation system.

- Boost customer satisfaction by utilizing your brand’s knowledge to enable natural, context-aware interactions for a seamless customer experience.

- Improve safety by reducing hallucinations with our proprietary Hallucination Detection model, so that generated responses are more accurate and consistent with your business’s underlying data.

Language support

Before you start

When you use enriched answers, it’s important to be aware of the potential for hallucinations. This is especially important when you use them in automated conversations with bots. Unlike with Conversation Assist, there is no agent in the middle as a safeguard.

If you use enriched answers in consumer-facing bots, you accept this risk of hallucinations and the liability that it poses to your brand, as outlined in your legal agreement with LivePerson.

Follow our best practices discussed later in this article: Test things out with internal bots and in Conversation Assist first.

Get started

- Learn about KnowledgeAI's answer enrichment service.

- Activate this Generative AI feature.

- Turn on enriched answers in one or more interactions in the bot. Select the prompt(s) to use. Configure the integration point.

- Test the consumer experience.

Turn on enriched answers

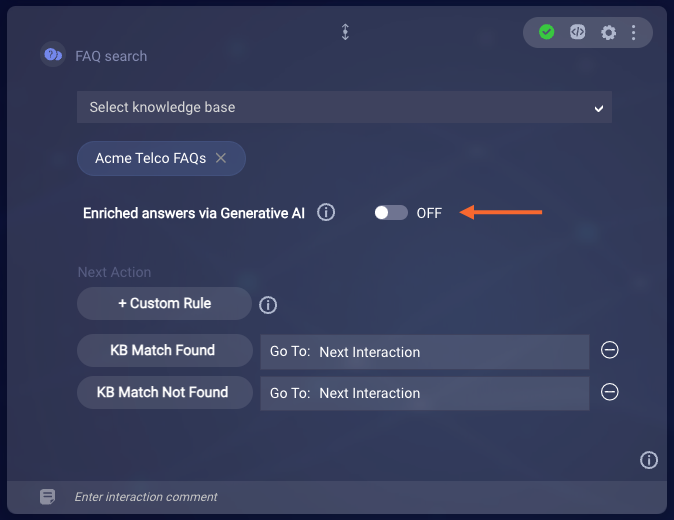

In a bot, you might want to use enriched answers in some interactions but not others. This is possible because the setting is at the interaction level: turn it on in some interactions, or in all of them. The choice is yours.

You'll find the Enriched answers via Generative AI setting on the face of the KnowledgeAI interaction:

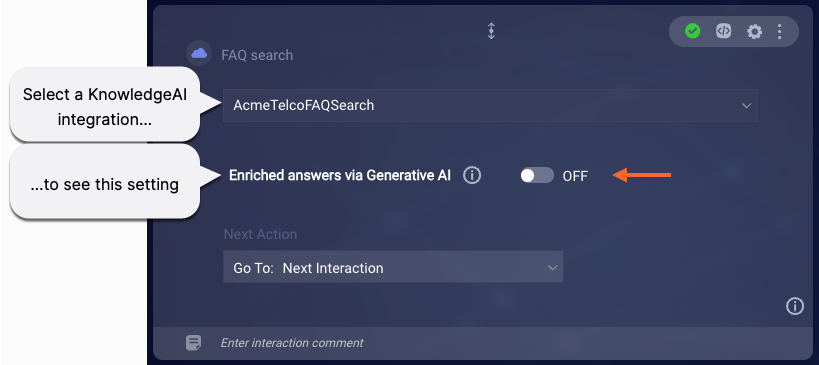

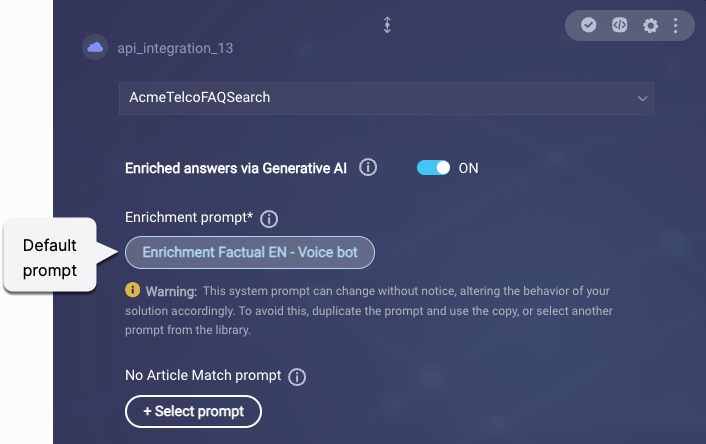

Alternatively, in the Integration interaction, the setting is displayed dynamically: it appears on the face of the interaction once you select a KnowledgeAI integration. (Typically, you use the Integration interaction in Voice bots.)

Default prompts

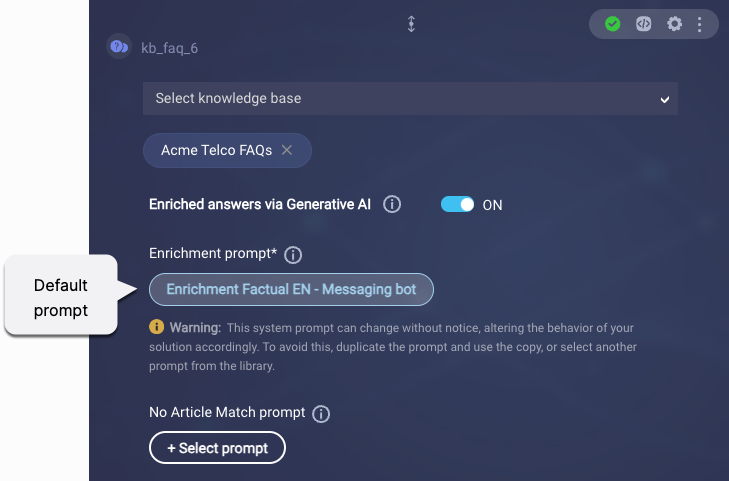

To get you up and running quickly, Generative AI integration points in bots make use of default Enrichment prompts. An Enrichment prompt is required, and we don't want you to have to spend time on selecting one when you're just getting started and exploring.

Here's the default prompt for messaging bots:

And here's the default prompt for voice bots:

Use the default prompts for a short time during exploration. But be aware that LivePerson can change these without notice, altering the behavior of your solution accordingly. To avoid this, at your earliest convenience, duplicate the prompt and use the copy, or select another prompt from the Prompt Library.

You can learn more about the default prompts by reviewing their descriptions in the Prompt Library.

Select a prompt

The process of selecting or changing a prompt is the same for the Enrichment prompt (required) and No Article Match prompt (optional).

- In the interaction, select the existing prompt to open the Prompt Library.

- Do one of the following:

  - If you're currently using the default system prompt, click Select from library in the lower-right corner.

  - If you're using one of your own prompts, click Go to library in the lower-left corner.

By default, your prompts are listed in the Select Prompt window.

- In My Prompts, select the prompt that you want to use.

- Click Select.

Since you're accessing the Prompt Library from Conversation Builder, the system filters the available prompts to just those of an appropriate Client type. For example, if you have a messaging bot open, then you'll see only your prompts for messaging bots.

When you're in the Prompt Library selecting a prompt, you can also create, edit, and copy prompts on the fly.

Configure the KnowledgeAI integration

There are two ways to integrate KnowledgeAI answers into a bot:

- KnowledgeAI interaction: Recommended for Messaging bots due to its power and simplicity.

- Integration interaction that uses a KnowledgeAI integration: Required by Voice bots.

Using the KnowledgeAI interaction

When using the Auto Render, Plain answer layout:

- Use the Max number of answers interaction setting to specify how many matched articles to retrieve from the knowledge base and send to the LLM-powered service to generate a single, enriched answer.

- The interaction always sends just a single, plain-text answer (enriched by the LLM) to the consumer.

Take care when using the Auto Render, Rich answer layout: In this context, the Max number of answers interaction setting has two purposes:

- Determines how many matched articles to retrieve from the knowledge base and send to the LLM-powered service to generate a single, enriched answer.

- Determines how many answers to send to the consumer.

If you set Max number of answers to 1, only the top scoring article is used by the LLM service to generate the enriched answer (purpose 1), and only that enriched answer is sent to the consumer (purpose 2). The enriched answer is always considered the top answer match, i.e., the article at index 0.

However, if you set Max number of answers to a higher number, both purposes apply. If there's more than one article match, the LLM service uses multiple articles to generate the single, enriched answer (purpose 1). But the consumer is also sent multiple answers: the enriched answer plus some unenriched answers (purpose 2).
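The two purposes can be modeled in a few lines. The following Python sketch is illustrative only: `Delivery`, `plan_delivery`, and the layout labels `"plain"` and `"rich"` are hypothetical names, not part of Conversation Builder, and the sketch ignores the optional No Article Match prompt.

```python
from dataclasses import dataclass


@dataclass
class Delivery:
    articles_sent_to_llm: int      # purpose 1: articles used for enrichment
    answers_sent_to_consumer: int  # purpose 2: answers the consumer receives


def plan_delivery(layout: str, max_answers: int, matched_articles: int) -> Delivery:
    """Model the effect of 'Max number of answers' for the two Auto Render layouts."""
    if max_answers < 1:
        raise ValueError("max_answers must be at least 1")
    used = min(max_answers, matched_articles)
    if used == 0:
        # No article match: nothing to enrich in this simplified model.
        return Delivery(0, 0)
    if layout == "plain":
        # Plain layout: always one enriched, plain-text answer.
        return Delivery(used, 1)
    if layout == "rich":
        # Rich layout: the enriched answer (index 0) plus the remaining
        # unenriched answers.
        return Delivery(used, used)
    raise ValueError(f"unknown layout: {layout}")
```

For example, `plan_delivery("rich", 3, 3)` reports that three articles feed the LLM and three answers reach the consumer, while the same settings with `"plain"` still send a single answer.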

It can be advantageous to specify a number higher than 1 because more knowledge coverage is provided to the LLM service when generating the enriched answer. As a result, the enriched answer is often better than when it's generated using just a single article.

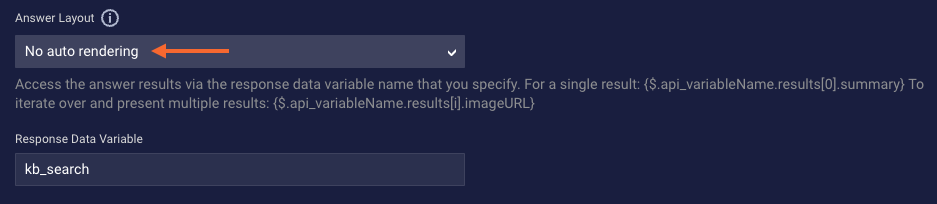

That said, if you do specify a number higher than 1, it's likely you don't want to send to the consumer unenriched answers along with the enriched one. If this is your case, keep the value higher than 1, and use the No Auto Rendering (custom) answer layout to send only the enriched answer to the consumer.

You can display just the enriched answer with:

{$.api_variableName.results[0].summary}

Important notes

- variableName is the response data variable name that you specified in the KnowledgeAI interaction's settings.

- The enriched answer is always considered the top answer match, i.e., the article at index 0. And the content is returned in the Summary field.
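To make the expression above concrete, here's a minimal Python sketch of the same lookup against a response shaped like `results[0].summary`. The sample payload is an assumption: only `results` and `summary` come from the expression, while `title`, the sample text, and the helper name `enriched_answer` are hypothetical.

```python
from typing import Optional


def enriched_answer(response: dict) -> Optional[str]:
    """Return the summary of the top match (index 0), or None if there is none."""
    results = response.get("results") or []
    if not results:
        return None
    return results[0].get("summary")


# Hypothetical response data: the enriched answer sits at index 0,
# followed by an unenriched match.
sample_response = {
    "results": [
        {"title": "Internet plans", "summary": "We offer three Internet plans."},
        {"title": "TV bundles", "summary": "Bundle TV with any plan."},
    ]
}
```

Here, `enriched_answer(sample_response)` returns only the first summary, which mirrors sending just the enriched answer when Max number of answers is higher than 1.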

Use of the Auto Render, Rich answer layout is supported, but it isn’t recommended. This is because the answer generated by the LLM might not always align well with the image/content URL that's used, which comes from the highest-scoring article.

Using the KnowledgeAI integration

When using this integration, the configuration is more straightforward because the approach is more manual: less is automated.

In the integration, the multipleResults setting determines only how many articles to try to retrieve from the knowledge base and send to the LLM-powered service to generate a single, enriched answer.

You can set multipleResults to 1. Or you can set it to a higher number. It can be advantageous to specify a higher number because more knowledge coverage is provided to the LLM service when generating the enriched answer. As a result, the enriched answer is often better than when it's generated using just a single article.

Whatever your choice, sending the enriched answer to the consumer is a manual implementation step via one or more interactions. Thus, you have control to ensure that only the enriched answer is sent.

The enriched answer is always considered the top answer match, i.e., the article at index 0. And the content is returned in the Summary field.

Learn more about how to use this integration in a Voice bot.

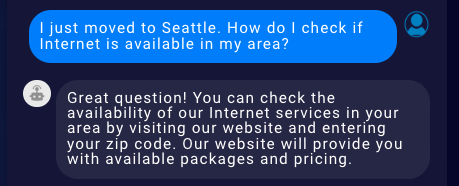

Consumer experience

Hallucination handling

Hallucinations in LLM-generated responses happen from time to time, so a Generative AI solution that’s trustworthy requires smart and efficient ways to handle them.

When returning answers to Conversation Builder bots, by default, KnowledgeAI takes advantage of our LLM Gateway's ability to rephrase responses to exclude hallucinated URLs, phone numbers, and email addresses. This is so bots don't send messages containing these types of hallucinations.
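To illustrate the kind of check involved, the Python sketch below flags URLs and email addresses in a generated answer that don't appear in the source articles. This is not how the LLM Gateway is implemented, and it only detects candidates rather than rephrasing the response; the function name, the regular expressions, and the sample strings are all assumptions made for illustration.

```python
import re

# Deliberately simple patterns; real-world matching needs more care.
URL_RE = re.compile(r"https?://[^\s)>\]]+")
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def ungrounded_contacts(answer: str, sources: list[str]) -> list[str]:
    """Return URLs and emails found in the answer but absent from the sources."""
    corpus = "\n".join(sources)
    found = URL_RE.findall(answer) + EMAIL_RE.findall(answer)
    # Strip trailing sentence punctuation before comparing.
    cleaned = [item.rstrip(".,;") for item in found]
    return [item for item in cleaned if item not in corpus]


sample_answer = "Visit https://example.com/plans or email help@example.org."
sample_sources = ["See https://example.com/plans for details."]
```

With the sample data, the URL is grounded in the source article, so only the email address is reported as a possible hallucination.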

Best practices

Internal bots

Request feedback from your users.

All bots

Always provide pathways (dialog flows) to ensure the user’s query can be resolved.

For example, assume you have a KnowledgeAI integration in the bot’s Fallback dialog, to “catch” and try to handle the queries that aren’t handled by the bot’s regular business dialogs. This is a common scenario. Further assume that this Fallback dialog transfers the conversation to an agent if no answers are returned. In this case, we don’t recommend that you ask us to turn on the feature that calls the enrichment service even when no article matches are found. Why? Because the bot would always provide some kind of response to the consumer. And the transfer to the agent would never happen.
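The reasoning in this example can be sketched as a small decision function. This is a simplified Python model, not bot code: `fallback_action`, its parameters, and the returned labels are hypothetical names chosen for illustration.

```python
def fallback_action(article_matches: int, enrich_without_match: bool) -> str:
    """Decide what a Fallback dialog does in this simplified model.

    enrich_without_match stands in for the feature that calls the
    enrichment service even when no article matches are found.
    """
    if article_matches > 0 or enrich_without_match:
        # Some answer is always produced, so the dialog never reaches
        # the transfer step.
        return "send_answer"
    return "transfer_to_agent"
```

Note that `fallback_action(0, True)` returns `"send_answer"`: with the feature on, the transfer branch is unreachable, which is why it isn't recommended for this dialog design.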

More best practices

See our KnowledgeAI best practices on using our enrichment service.

Reporting

KnowledgeAI's Consumer Queries feature offers a report on query-and-answer information. It contains the consumer's query, the matched article (if any), how the article was matched, the confidence score, and more.

You can also use the Generative AI Dashboard in Conversational Cloud's Report Center to make data-driven decisions that improve the effectiveness of your Generative AI solution.

The dashboard helps you to answer important questions, such as: How is Generative AI performing in my solution? To what extent is Generative AI helping my agents and bots?

The dashboard draws conversational data from all channels across Voice and Messaging, producing actionable insights that can drive business growth and improve consumer engagement.

Access Report Center by clicking Optimize > Manage on the left-hand navigation bar.

FAQs

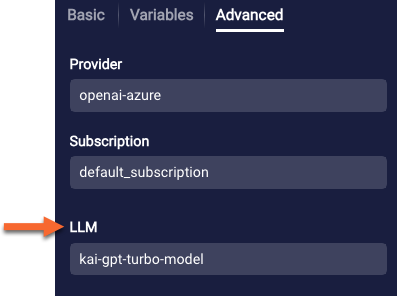

What LLM is used?

To learn which LLM is used by default, open the prompt in the Prompt Library, and check the prompt's Advanced settings.

Can I use your LLM-powered features to take action on behalf of consumers?

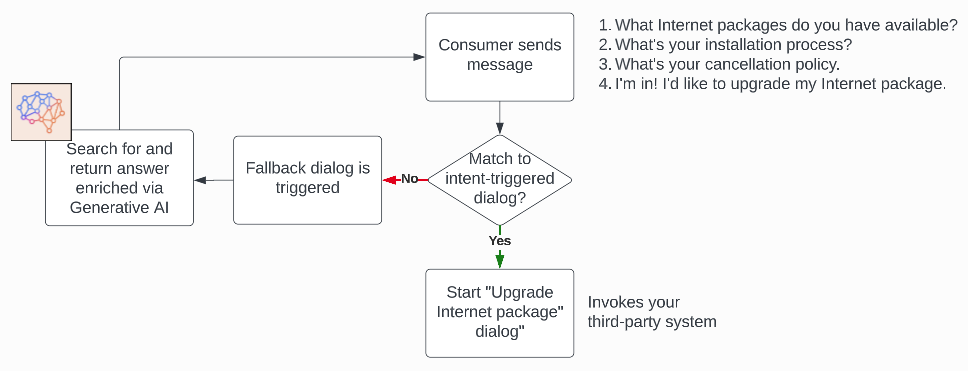

Currently, there aren’t any LLM-powered features that take action, i.e., that trigger the business-oriented dialogs in your bot. However, a common design pattern is to implement an LLM-powered KnowledgeAI integration in the bot’s Fallback dialog. This takes care of warmly and gracefully handling all consumer messages that the bot can’t handle. Once the consumer says something that triggers an intent-driven dialog, that dialog flow begins immediately.

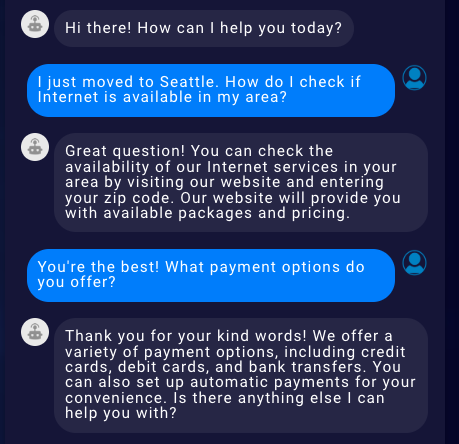

As an example, the flow below illustrates a typical conversation between a Telco bot and a consumer inquiring about Internet packages. The consumer’s first 3 messages are handled by the Fallback dialog, but the 4th triggers the business dialog that takes action.

The great thing about this design pattern is that it works very well with our context switching feature. Once the business dialog begins, it remains “in play” until it’s completed. So, if the consumer suddenly interjects another question that the bot can’t handle (for example, “Wait, can I bundle Internet and TV together?”), the flow moves to the Fallback dialog for another LLM-powered, enriched answer. And then, importantly, it returns automatically to the business dialog after sending that answer.

The other great thing about this design pattern is that it works very well with bot groups too. That is, if the consumer’s message matches a dialog in a bot within the same bot group, that other bot’s dialog is immediately triggered. The first bot’s Fallback dialog is never triggered for an enriched answer. Instead, the consumer is taken right into the action-oriented business flow.

I’m getting different results in Conversation Builder’s Preview tool versus KnowledgeAI’s Answer Tester. Is this expected?

Semantically speaking, you should get the same answer to the same question when the context is the same. However, don’t expect the exact wording to be the same every time. That’s the nature of the Generative AI at work creating unique content.

Also, keep in mind that when using KnowledgeAI's Answer Tester, no conversation context is passed to the LLM service that does the answer enrichment. So, the results will be slightly different.

Conversation context is only passed when using:

- Preview

- Conversation Tester

- A deployed bot

The added conversation context can yield better results.

Troubleshooting

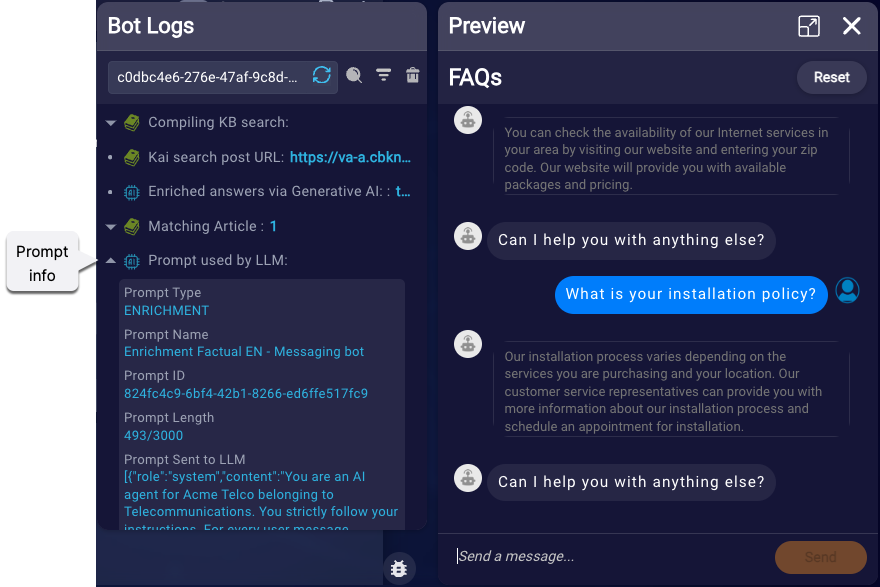

What debugging tools are available?

You can use both Bot Logs and Conversation Tester within Conversation Builder to debug your Generative AI solution. Both tools provide detailed info on the article matching and the prompt used. Here's an example from Bot Logs:

My consumers aren’t being sent answers as I expect. What can I do?

In the interaction or integration (depending on the implementation approach you're using), try adjusting the Min Confidence Score for Answers (a.k.a. threshold) setting. But also see the KnowledgeAI discussion on confidence thresholds.
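As a rough illustration of why the threshold matters, the Python sketch below filters matches by a minimum score. It assumes only that confidence scores can be compared on an ordered scale; the numeric values, the article titles, and the function name are hypothetical and don't reflect how KnowledgeAI represents confidence.

```python
def answers_meeting_threshold(
    matches: list[tuple[str, float]], min_score: float
) -> list[str]:
    """Keep the titles of matches whose score is at or above the threshold."""
    return [title for title, score in matches if score >= min_score]


# Hypothetical matches as (article title, confidence score) pairs.
sample_matches = [("Reset your router", 0.82), ("Internet plans", 0.55)]
```

With a threshold of 0.7, only the first article qualifies; lowering it to 0.5 lets both through, and raising it to 0.9 returns nothing, which is one way consumers can end up without an answer.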

Related articles

- Enriched Answers via Generative AI (KnowledgeAI)

- Prompt Library Overview

- Offer Enriched Answers via Generative AI (Conversation Assist)

- Trustworthy Generative AI for the Enterprise

- Bring Your Own LLM