Introduction

You want the answers sent to your consumers to align with both your brand's objectives and each consumer's specific needs. Custom processing powered by Generative AI helps you achieve this by tailoring responses to enhance the consumer experience and strengthen brand engagement.

Custom processing of the answers refines them before they’re returned to the client application that requested them. This can ensure the answers align with your brand’s voice, are in the right language, and more.

You can perform any kind of processing of the answers that you require. Use an LLM made available via LivePerson or use your own.

Use cases

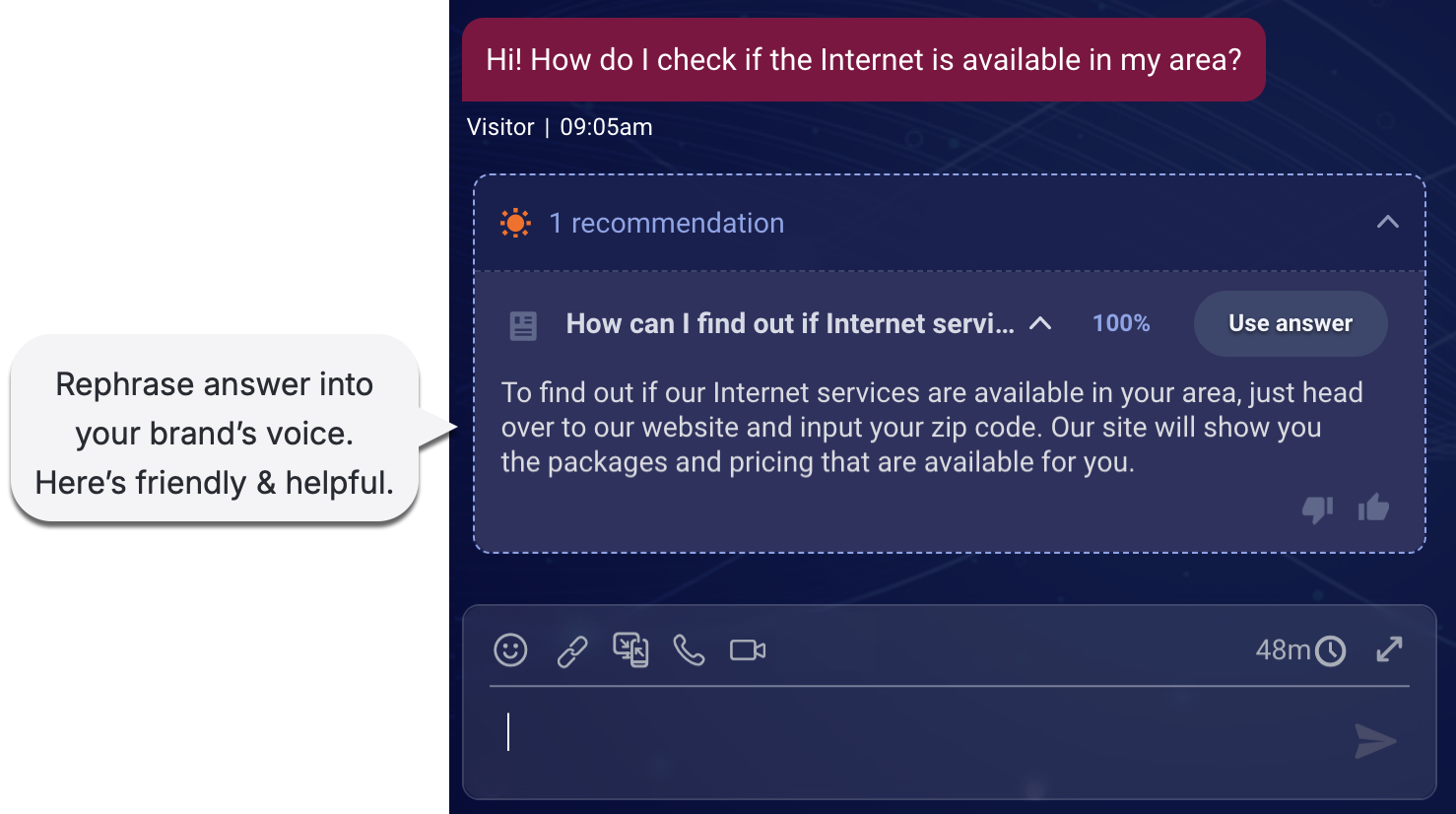

A popular use case for custom processing of the answers is to rephrase the answers so that they better conform to your brand’s voice.

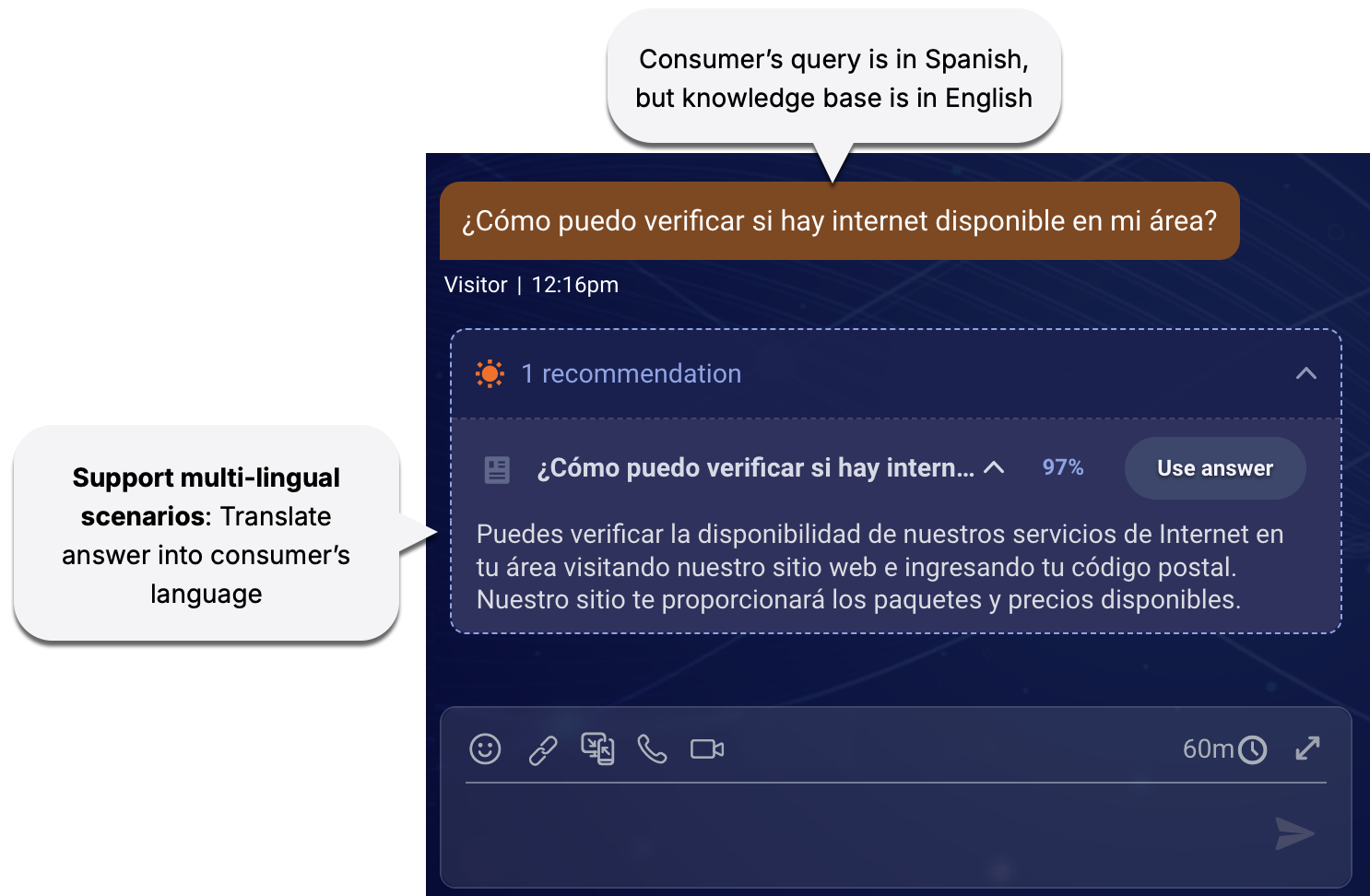

Or, if you’re supporting cross-lingual queries (say the query is in Spanish, but your knowledge base is in English), you might want to translate the answers into the consumer’s language.

Benefits

- Promote smarter conversations via optimized answers.

- Ensure brand compliance by aligning communications with your brand’s voice.

- Support a global reach with multi-lingual capabilities.

- Save agents time and reduce manual edits.

Applications using this

Currently, custom processing of the answers via Generative AI is only supported by Conversation Assist. Learn how to turn this on in a knowledge base rule within Conversation Assist.

How it works

Before KnowledgeAI returns the answers to the client application that requested them, the answers are processed using an LLM. The output from the LLM must be JSON that contains a list of answers, and the prompt must instruct the LLM to produce this. (Check out the prompts provided by LivePerson to see how this is done.)

Learn where custom processing of answers fits into the overall search flow.

If a failure occurs during the LLM’s custom processing of the answers, or if the LLM doesn’t return the required output (a JSON list of answers), then KnowledgeAI returns the unprocessed answers to the client application. That is, it returns the answers that were sent to the LLM for processing. A failure might occur for several reasons, for example:

- The LLM is unavailable.

- The LLM doesn’t return the list of answers in JSON format.

Testers can see such failures in KnowledgeAI’s Answer Tester, but no error is returned to the client application that made the request for answers. This is by design, so that answers are always returned, even if they haven’t been refined.
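
The fallback behavior described above can be illustrated with a minimal Python sketch. This is not KnowledgeAI’s actual implementation. The function `process_answers` and the `call_llm` parameter are hypothetical names used only for illustration, and the sketch assumes that answers are plain strings and that the LLM is expected to return a bare JSON array of strings. The real output format is defined by the prompt.

```python
import json
from typing import Callable, List


def process_answers(answers: List[str], call_llm: Callable[[str], str]) -> List[str]:
    """Return the LLM-processed answers, or the original answers on any failure.

    `call_llm` is a hypothetical stand-in for whatever sends the answers
    to the LLM and returns its raw text output.
    """
    try:
        raw_output = call_llm(json.dumps(answers))
        processed = json.loads(raw_output)
    except Exception:
        # The LLM was unavailable, or its output was not valid JSON:
        # fall back to the unprocessed answers instead of raising an error.
        return answers

    # Assumption for this sketch: the required output is a JSON array of strings.
    if not isinstance(processed, list) or not all(isinstance(a, str) for a in processed):
        return answers

    return processed


def fake_llm(prompt: str) -> str:
    """Stand-in LLM for demonstration: upper-cases every answer."""
    return json.dumps([a.upper() for a in json.loads(prompt)])


def broken_llm(prompt: str) -> str:
    """Stand-in LLM that ignores the JSON instruction."""
    return "Sure! Here are your answers."


print(process_answers(["hello"], fake_llm))    # ['HELLO']
print(process_answers(["hello"], broken_llm))  # ['hello']
```

In both cases the caller receives a list of answers and never an error, which mirrors the design choice described above: the client application always gets answers, processed or not.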

Prompt templates

LivePerson makes available the following related prompt templates in the Prompt Library:

- Answer Translation - Conversation Assist (Messaging)

- Answer Translation - Conversation Assist (Voice)

Prompt customization

Customizing the prompt? Learn about the variables used in custom processing of answers from KnowledgeAI.

As mentioned above, the output from the LLM must be JSON that contains a list of answers, so don’t change the prompt’s instructions about the output format; they’ve been tested by LivePerson.