Prompts

In the context of Large Language Models (LLMs), the prompt is the text or input that is given to the model in order to receive generated text as output. Prompts can be a single sentence, a paragraph, or even a series of instructions or examples.

The LLM uses the provided prompt to understand the context and generate a coherent and relevant response. The quality and relevance of the generated response depend on the clarity of the instructions and how well the prompt conveys your intent.

The interaction between the prompt and the model is a key factor in determining the accuracy, style, and tone of the generated output. It's crucial to formulate prompts effectively to elicit the desired type of response from the model. See our best practices for writing prompt instructions.

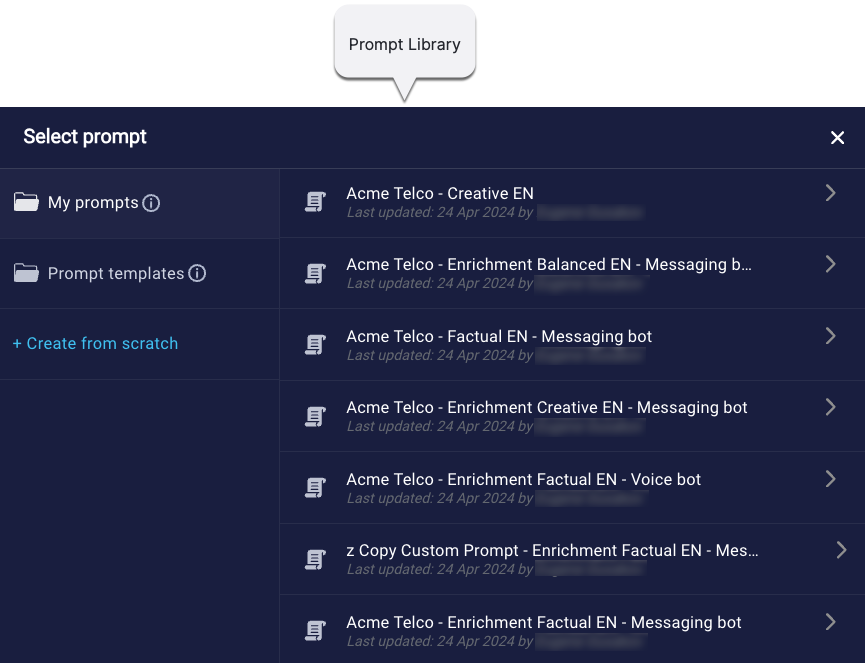

Prompt Library

The Prompt Library is the user interface that you use to select and manage prompts.

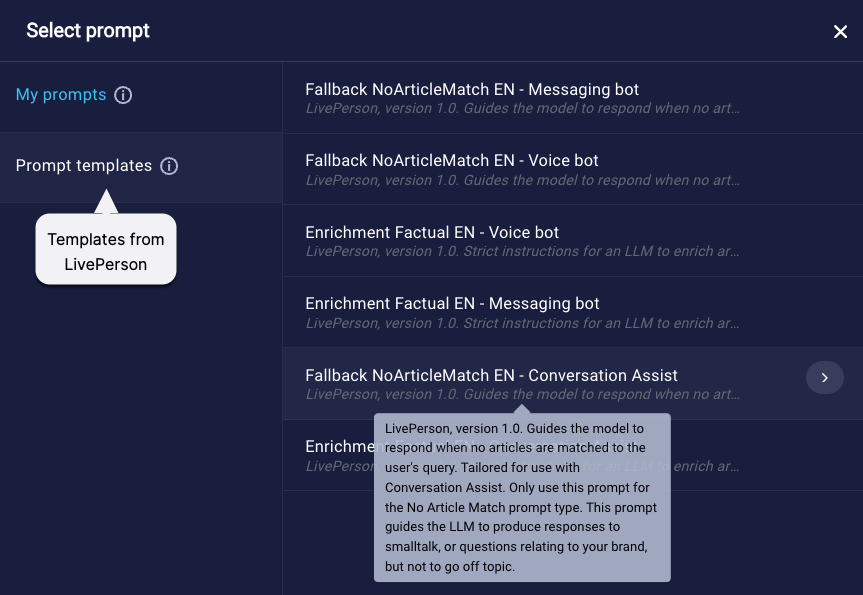

Prompt templates

Prompt templates are prompts that LivePerson creates, tests, and maintains. They’re intended to get you up and running quickly so you can explore a Generative AI solution.

Whenever you choose to use a prompt template, a complete copy of it is made, which you can customize if desired. Once you save and select the copy, it's added to My Prompts in the Prompt Library. Copying the template ensures that subsequent changes we make to it don’t inadvertently affect the behavior of your solution.

Once made, your copy has no link to the template it came from. It’s independent by design, so our updates to prompt templates never reach your solution unexpectedly.
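To make the copy semantics concrete, here's a minimal Python sketch. The `Prompt` class, its fields (including the `settings` dictionary), and `copy_template` are hypothetical illustrations, not the Prompt Library's actual data model. The point is only that a deep copy shares no state with the template it came from, so a later template update leaves the copy untouched.

```python
import copy
from dataclasses import dataclass, field


# Hypothetical prompt model; field names are illustrative, not LivePerson's schema.
@dataclass
class Prompt:
    name: str
    instructions: str
    settings: dict = field(default_factory=dict)


def copy_template(template: Prompt, new_name: str) -> Prompt:
    """Return an independent copy of a template (hypothetical helper).

    deepcopy also duplicates nested objects such as the settings dict,
    so later edits to the template can't leak into the copy.
    """
    mine = copy.deepcopy(template)
    mine.name = new_name
    return mine


template = Prompt("FAQ template", "Answer using only the matched articles.",
                  {"temperature": 0.2})
my_prompt = copy_template(template, "My FAQ prompt")

# Simulate a later update to the template by its maintainer.
template.instructions = "Answer concisely."
template.settings["temperature"] = 0.7

print(my_prompt.instructions)             # Answer using only the matched articles.
print(my_prompt.settings["temperature"])  # 0.2
```

A shallow copy (`copy.copy`) would not be enough here: the copy would still share the template's `settings` dictionary, so the simulated update would change both.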

Custom prompts

Custom prompts are prompts created by users of your account and visible only within your account. They’re tailored to suit your specific requirements. You can create custom prompts:

Released prompts

The latest version of a prompt is always the released version, which is the one used by client applications such as Conversation Assist and LivePerson Conversation Builder bots.

Follow our best practices for releasing prompt changes.

Tokens

LLMs handle text by segmenting it into units called tokens. Tokens represent common sequences of characters. For instance, the word "infrastructure" is split into "inf" and "rastructure", while a word like "bot" remains a single token.

An LLM’s context window is the total number of tokens permitted by the model. This limit covers both the input tokens sent to the model and the tokens generated in the model’s response; it applies to the two combined, not to each separately. Every model has a fixed context window that’s based on computational constraints.

For most LLMs, it’s relatively easy to keep the combined count of input and generated tokens well within the context window. But be aware that the limit exists.
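The following minimal Python sketch illustrates the arithmetic. Because the limit applies to input and generated tokens combined, both counts are added before comparing against the window. The function names and the 8,192-token window are illustrative assumptions, not values for any particular model; in practice the input count would come from the model's own tokenizer.

```python
def fits_context_window(input_tokens: int, max_output_tokens: int,
                        context_window: int) -> bool:
    """Return True if the prompt plus the largest allowed response fits.

    The context window caps input and generated tokens combined,
    so both counts are added before the comparison.
    """
    if min(input_tokens, max_output_tokens, context_window) < 0:
        raise ValueError("token counts must be non-negative")
    return input_tokens + max_output_tokens <= context_window


def remaining_output_budget(input_tokens: int, context_window: int) -> int:
    """Tokens left for the response once the input has been counted."""
    return max(context_window - input_tokens, 0)


# Illustrative window size only; real limits depend on the model.
CONTEXT_WINDOW = 8_192

print(fits_context_window(1_500, 500, CONTEXT_WINDOW))  # True  (2,000 <= 8,192)
print(fits_context_window(7_900, 500, CONTEXT_WINDOW))  # False (8,400 > 8,192)
print(remaining_output_budget(7_900, CONTEXT_WINDOW))   # 292
```

The second call shows why the combined total matters: a 7,900-token input fits on its own, but not together with a 500-token response.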

Learn more about tokens

- https://platform.openai.com/docs/introduction/tokens

- https://help.openai.com/en/articles/4936856-what-are-tokens-and-how-to-count-them

- https://platform.openai.com/tokenizer

- https://platform.openai.com/docs/guides/text-generation/managing-tokens

Hallucinations

Hallucinations are situations where the underlying LLM service generates incorrect or nonsensical responses, or responses that aren't grounded in the contextual data or brand knowledge that was provided.

For example, suppose the consumer asks, “Tell me about your 20% rebate for veterans.” If the LLM service accepts the presupposition within that query (that such a rebate exists) as true when in fact it isn’t, the LLM service will hallucinate and send an incorrect response.

Be aware that all prompts have the potential for hallucinations. Typically, a hallucination happens when the LLM relies too heavily on the general knowledge in its language model and fails to make effective use of the provided source content. The degree of risk depends on the prompt style that’s used. For example, if your solution uses answers that are enriched via Generative AI, consider these questions:

- Does the prompt direct the service to respond using only the info in the matched articles?

- Does the prompt direct the service to adhere to the script in the matched articles as much as possible?

- Does the prompt direct the service to come up with answers independently when necessary (i.e., when no relevant articles are matched), using both the info in the conversation context and in its language model?

Carefully evaluate the prompts that you create against questions like those above. Strike a balance between constraint and freedom that aligns with the level of risk that you accept. And always test your prompts thoroughly before using them in Production.

Conversational Cloud's LLM Gateway has a Hallucination Detection post-processing service that detects and handles hallucinations with respect to URLs, phone numbers, and email addresses.

Prompt updates by LivePerson

New prompts released by LivePerson won't affect your solution, because we release them as separate prompts with new, unique names.