What’s a Voice bot?

A voice bot, or virtual assistant, is an AI program designed to communicate with humans through spoken language. Voice bots recognize both speech and the touch tones from the telephone, which are called DTMF tones. As a result, the consumer can speak their intent (e.g., “I want my account balance”) and can interact with the bot either by speaking or by pressing the keys on their phone (e.g., by saying or entering their account number). In turn, using prerecorded messages and dynamic text-to-speech (TTS), the bot can respond to the consumer through speech.

Voice bots are a powerful way to engage with your consumers through communication that is effortless and natural to them. They support routing to human agents or other voice bots and preserve the conversation’s context during the transfer, so the hand-off is “warm.”

Advantages of Voice bots

Advantages to consumers

- Convenience: Let consumers perform tasks hands-free and without the need for physical input. This is particularly useful in situations where consumers might not be able to use their hands. Driving. Cooking. Anywhere.

- Efficiency: Save consumers time and effort. Voice bots are designed to quickly and accurately perform tasks, such as answering questions or scheduling an appointment.

- Personalization: Create and offer more personalized experiences. Voice bots are designed to learn from consumer interactions and tailor their responses accordingly.

- Integration: Tie into third-party systems easily. Check the status of an order. Refill a prescription. Make an appointment. And more.

- Accessibility: Allow people with disabilities to interact with technology in a more natural and accessible way.

Advantages to your brand

- Reduce your overall operating costs by reducing the number of human agent seats that you need, through the increased use of bots with high containment.

- Drive efficiency by letting voice bots take care of simple, repetitive tasks.

- Reduce contact center telephony costs by minimizing the number of telco minutes that you use.

- Increase customer loyalty and lifetime value by improving customer satisfaction (CSAT). You can realize higher CSAT because your consumers can get things done quickly, in their own time. They don’t need to wait for agents to become available during business hours, nor do they need to wait on hold in agent queues.

Using Voice bots and Messaging bots makes possible a rich, rewarding experience on any voice or digital communication channel. The consumer can ask a question, resolve a problem, or make a purchase using any device in any setting: driving a car, in a meeting, running errands, or relaxing on the couch.

Language support

Try the tutorial

Learn by doing. Try the Voicebot tutorial.

Before you begin

Watch the video

Check out our 3-minute video for bot builders.

Complete preliminary setup

To get started, there are some preliminary steps you must complete:

- Activate the Voice Bots feature.

- Port or claim a phone number.

- Configure call recording and other settings.

- Configure voice settings and audio cues.

Key concepts

Voice bots and messaging bots work similarly

To be sure, voice bots have some special functionality that’s particular to the Voice channel. But overall you build voice bots and messaging bots in LivePerson Conversation Builder in the same way.

Moreover, many aspects of the bot behave similarly, such as:

- Intents and patterns

- Variables and slots

- Scripting code in interactions

- Agent connectors

- And more

Unique considerations for voice bots are called out in this article where appropriate.

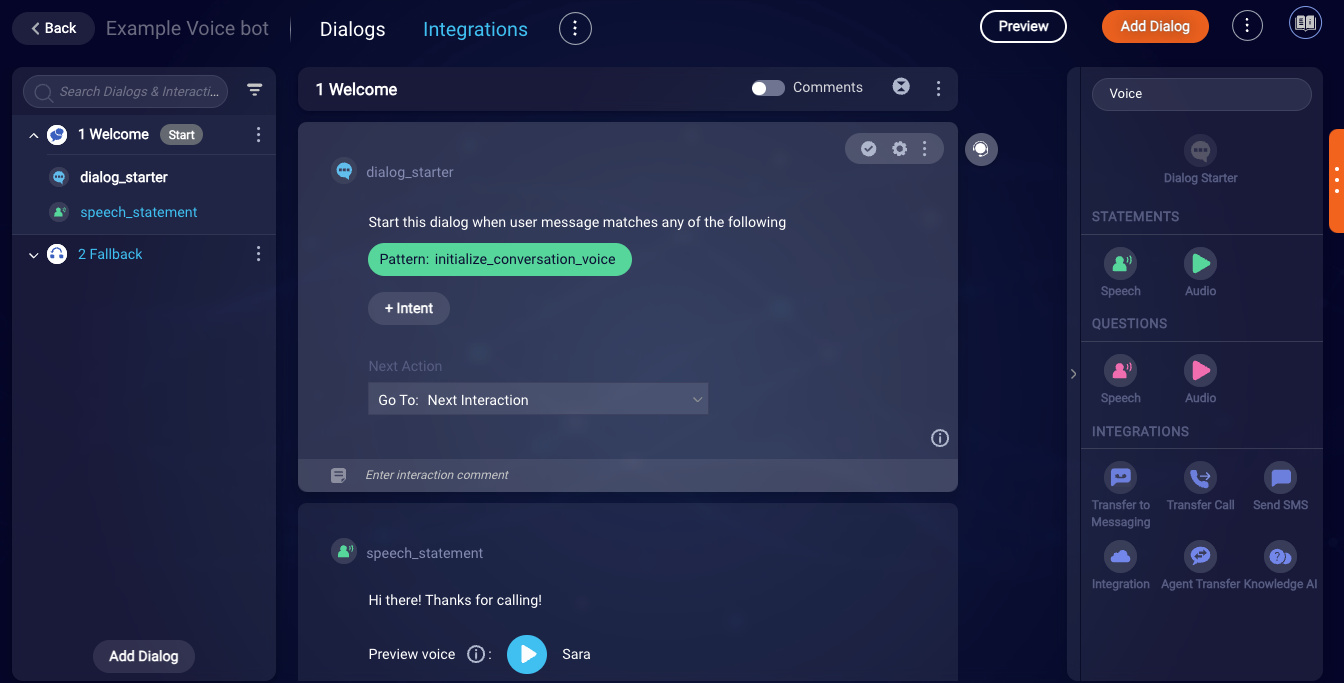

How voice conversations begin

In the Voice channel, it’s the consumer that first starts things off by making a call. The call is to a specific number that you previously claimed and set up for use, tying the number to a skill in Conversational Cloud. When the consumer makes a call to the number, if the number’s assigned skill matches the Voice bot’s assigned skill, the Voice bot answers the call.

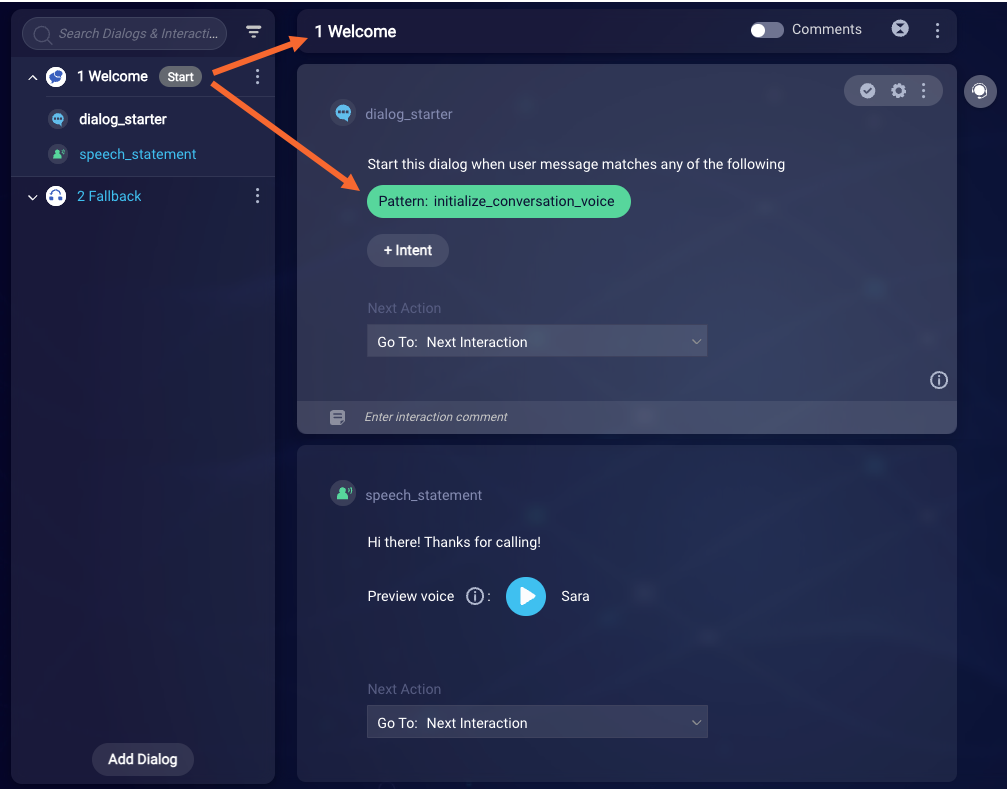

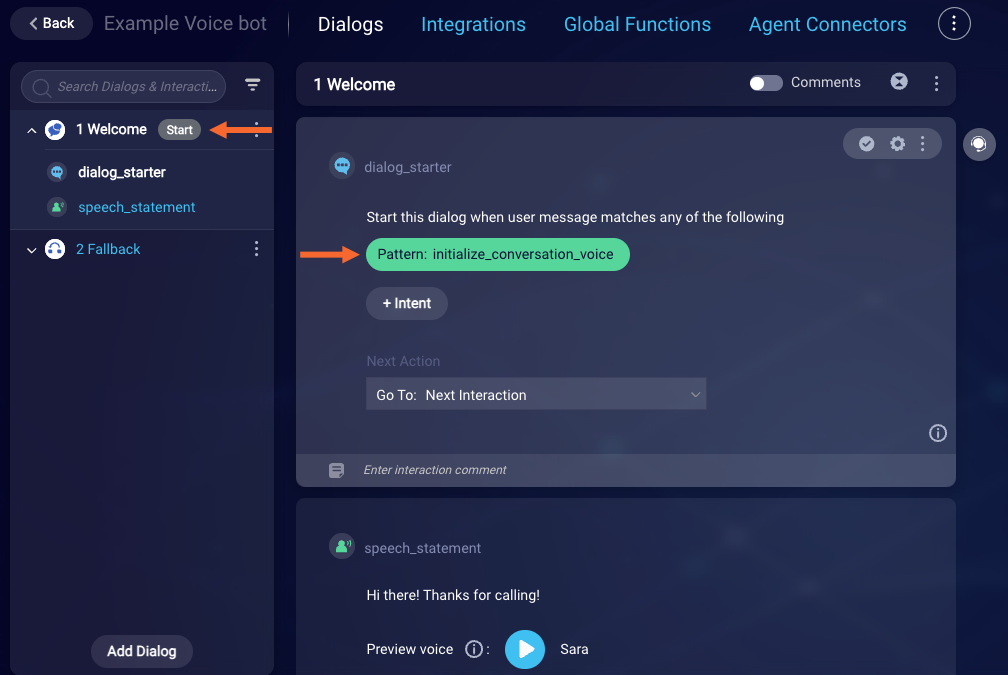

Once the Voice bot answers the call, the bot needs to start off the conversation. The consumer called, so it’s the bot that must speak first. There are two configuration elements that control this:

-

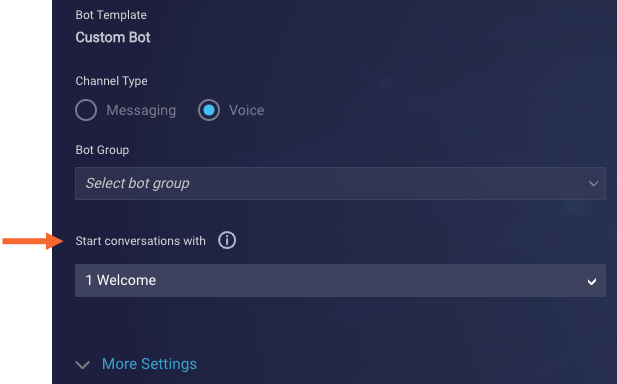

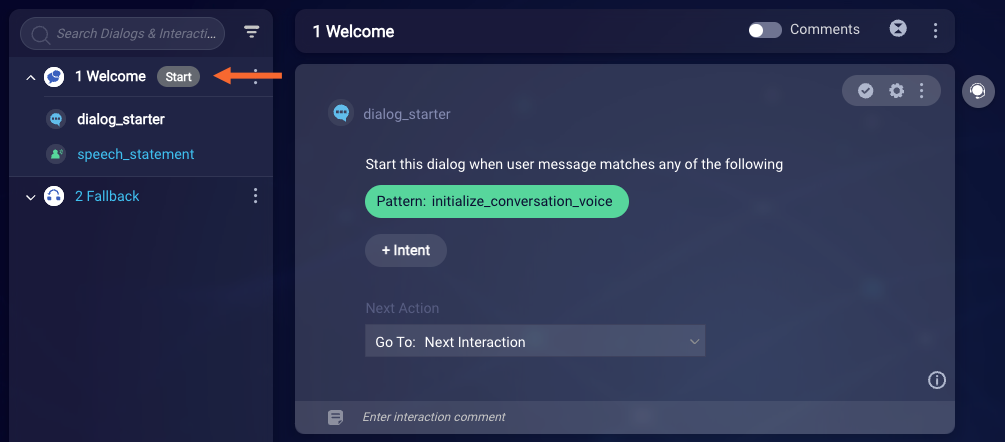

In Bot Settings, the Start conversations with setting identifies the dialog to trigger when the conversation is started. In the UI, this dialog is given a Start badge.

-

In the dialog starter of the dialog selected in the Start conversations with bot setting, the pattern

initialize_conversation_voicemust exist. This isn't some type of response from the caller. Rather, after the call is set up (i.e., all underlying system resources are prepared) and the conversation is ready to be started, the system automatically sends this pattern to the bot so that the bot starts the conversation.

Together, these two configuration elements yield the best experience for starting the conversation: they ensure that the conversation starts at the right time and that the consumer hears the bot’s first message.

By default, in any Voice bot that you create, the Welcome dialog that’s provided out-of-the-box is designated as the Start dialog. So, its dialog starter matches the initialize_conversation_voice pattern.

You can change the dialog that starts off the conversation. Just remember to change both of these configuration elements:

- The value of the Start conversations with bot setting

- The location of the initialize_conversation_voice pattern match
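If you keep a copy of a bot's configuration outside Conversation Builder, a small check can confirm that the two elements agree after you change the Start dialog. The following is a minimal sketch over a hypothetical, simplified data model: the Dialog structure, its field names, and check_start_config are assumptions for illustration, not the Conversation Builder export format or API.

```python
from dataclasses import dataclass, field

# Pattern the system sends to the bot once the call is ready (from the text above).
INIT_PATTERN = "initialize_conversation_voice"


@dataclass
class Dialog:
    """Hypothetical, simplified dialog: a name plus the patterns its dialog starter matches."""
    name: str
    starter_patterns: list[str] = field(default_factory=list)


def check_start_config(start_dialog: str, dialogs: list[Dialog]) -> list[str]:
    """Return problems found; an empty list means both configuration elements agree."""
    problems = []
    if start_dialog not in {d.name for d in dialogs}:
        problems.append(f"Start dialog {start_dialog!r} does not exist")
    for d in dialogs:
        has_pattern = INIT_PATTERN in d.starter_patterns
        if d.name == start_dialog and not has_pattern:
            # Without the pattern, the Start dialog isn't triggered to begin the conversation.
            problems.append(f"{d.name!r} is the Start dialog but doesn't match {INIT_PATTERN}")
        elif d.name != start_dialog and has_pattern:
            # Likely a leftover match that wasn't moved when the Start dialog changed.
            problems.append(f"{d.name!r} matches {INIT_PATTERN} but isn't the Start dialog")
    return problems


dialogs = [Dialog("Welcome", [INIT_PATTERN]), Dialog("Fallback")]
print(check_start_config("Welcome", dialogs))
```

Changing only the Start setting (for example, to "Fallback") without moving the pattern match would report two problems: the new Start dialog lacks the pattern, and the old one still has it.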

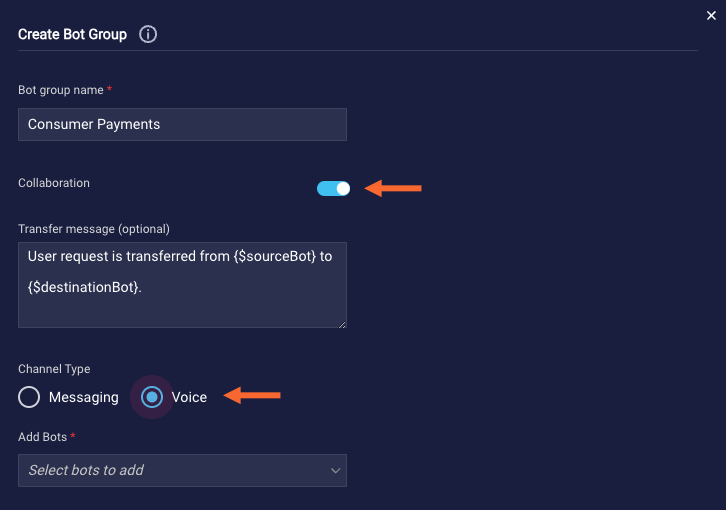

Bot-to-bot collaboration

You might be planning to build multiple Voice bots for handling different consumer requests, to make things easier to scale and maintain. If this is the case, put all your Voice bots into a bot group that’s specifically designated for the Voice channel and supports collaboration.

When your Voice bots are in an exclusive, collaborative group, you can take advantage of automatic transfers within the group, since every bot in the group supports speech.

A collaborative conversation might begin like this:

Welcome bot: Hi there! Thanks for calling. What’s your name?

Consumer: John

Welcome bot: Hi John, how can I help you?

Consumer: I’d like to pay my bill over the phone.

Payment bot: Sure, John, I can help you with that.

Payment bot: What’s your account number?

In the conversation above, the Welcome bot starts things off and asks the consumer for their intent. But the Welcome bot can’t handle the consumer’s request, so the conversation is automatically transferred to the Payment bot in the group, which can handle the request. When the transfer is performed, the intent is automatically passed to the Payment bot. With the intent available, the Payment bot immediately begins its dialog flow for bill payment. Notably, the Payment bot’s Welcome dialog is never triggered. What’s more, other contextual information like the consumer’s name can also be passed along (via the Conversation Context Service), so the hand-off is “warm.” This too is illustrated above.

Currently, transfers between Voice bots and Messaging bots aren’t supported. For this reason, the UI doesn’t allow you to mix Voice and Messaging bots within the same bot group at this time.

Best practices

Start the conversation off right

The bot’s first message to the consumer should be short. The bot should introduce itself, tell the consumer what it can do, and ask the consumer why they’re calling: “Hi, I’m Jonn, your brand's assistant. I can help you locate a store, tell you our hours, or check on the status of an order. Say ‘help’ at any point to learn more about what I can do for you.”

If the bot can do many things, you might want to mention just the top two to four, like in our example above. You could then add a “help” or “information” intent for handling the rest and fielding questions like, “What else can you do?”

Keep it human-like

At LivePerson, we believe that consumers can engage with Conversational AI in ways that make strong connections, instilling not just confidence but delight too. Strive for a voice bot that’s smart and, well, human-like. Keep the voice bot’s language colloquial and its responses empathetic.

Keep it short

On the phone, the consumer is a captive audience, and they can quickly become frustrated if they have to listen to long messages. Overall, refine all messages so that everything said by the bot is as brief as possible.

Make the most of contextual info

Consider the scenario where the consumer calls, is identified immediately, and their recent order history is retrieved. The bot might jump right in with, “Hi Jane, are you checking on the phone charger you ordered?”

The above scenario can save the consumer time and effort, as there’s no need for them to supply the order number. It can also promote confidence in interacting with the bot.

In general, you can keep things brief and relevant for the consumer by retrieving, capturing, and making the most of contextual information.

Use yes/no questions

Consumers know well how to respond to yes/no questions, so take advantage of that in the conversation flow. “Can I help you with {specific task}?” is easier to answer than, “What can I do for you?” Similarly, “Are you ready to check out?” is easier than, “What else can I get for you?”

Make the most of questions that handle yes/no or confirm/reject options.

Enumerate choices as needed

On the phone, it can be tedious for the consumer to hear all the available choices or options for a question. This is especially true if it’s the case for every question. In every phone call.

Consider shortening things where appropriate. For example, the bot might tell the consumer up front that they can say “options” at any time to hear the list of options or choices for a question. That way, a question like, “What size pizza would you like?” doesn’t always have to enumerate the choices.

Here’s an example scenario:

1. Early in the call, the bot informs the consumer that they can say “Options” at any point to activate a list of options or choices for a given question.

2. The consumer triggers the Order Pizza intent.

3. The bot responds with, “What size would you like?” (A “Pizza size” interaction)

4. The consumer might not know the sizes available or how to communicate their desired size (small, medium, or large? 12-inch, 14-inch, or 16-inch? And so on).

5. The consumer says “Options” or “I don’t know what I can choose from” to trigger the “Options” intent.

6. This triggers a dialog that includes an interaction that lists the options for the “Pizza size” interaction and asks the consumer what size they want.

7. The consumer responds with a valid selection.

8. The bot flow continues as normal.

If in Step 5 the consumer instead responds with an invalid option, remind them how to activate the “Options” intent, for example, “I’m sorry. We don’t have that size. If you need to know your choices, just say, ‘Options.’”
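The scenario above can be modeled as a small handler that knows the current question and its valid choices. This is a minimal, platform-neutral sketch: the Question type, the trigger phrases in OPTIONS_PHRASES, and the reply wording are hypothetical. In Conversation Builder you would implement the same logic with an Options intent and dialog rather than Python.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical phrases that trigger the "Options" intent.
OPTIONS_PHRASES = {"options", "i don't know what i can choose from"}


@dataclass
class Question:
    """Hypothetical model of a question interaction and the answers it accepts."""
    prompt: str
    choices: list[str]


def spoken_list(items: list[str]) -> str:
    """Join choices the way they'd be spoken: 'a', 'a or b', 'a, b, or c'."""
    if len(items) == 1:
        return items[0]
    if len(items) == 2:
        return f"{items[0]} or {items[1]}"
    return ", ".join(items[:-1]) + ", or " + items[-1]


def handle_answer(question: Question, utterance: str) -> tuple[str, Optional[str]]:
    """Return (bot reply, accepted choice); the choice is None when the flow shouldn't advance."""
    text = utterance.strip().lower().rstrip(".")
    if text in OPTIONS_PHRASES:
        # "Options" intent: list the choices, then ask the question again.
        return f"You can choose {spoken_list(question.choices)}. {question.prompt}", None
    for choice in question.choices:
        if text == choice.lower():
            return f"Great, {choice} it is.", choice
    # Invalid answer: remind the consumer how to hear their choices.
    return ("I'm sorry. We don't have that size. "
            "If you need to know your choices, just say, 'Options.'"), None
```

Keeping the choices on the question itself means the same Options handler can serve every question in the bot.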

Overall, provide a conversational flow to handle options and choices efficiently, and your repeat consumers will be thrilled.

Repeat questions when prompted

In Messaging conversations, the consumer is always able to look at the messaging window to refer back to a question that was asked by the bot. This isn’t the case with voice conversations. Once the question is asked, it’s past.

The trouble with this is that in Voice conversations, a range of factors can affect the consumer’s ability to understand or hear a question. A loud noise. Connectivity issues. Being interrupted by another person. Simply forgetting the question. All of these things can happen in a conversation.

Build into your voice bot the ability to repeat a question as needed. The solution here is very similar to that for enumerating choices as needed (see the section above). For example, you might create a “Repeat question” intent to signal to the bot that it needs to ask the question again. Once the question has been answered by the consumer, the bot should resume its flow as normal.
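A “Repeat question” intent mainly requires the bot to remember the last question it asked. Here's a minimal sketch, assuming a hypothetical per-call session object and hypothetical repeat phrases; it isn't the Conversation Builder API, where you might instead store the last question's text in a bot variable.

```python
from typing import Optional

# Hypothetical phrases that trigger a "Repeat question" intent.
REPEAT_PHRASES = {"repeat", "repeat that", "say that again", "what was the question"}


class VoiceSession:
    """Hypothetical per-call state that remembers the last question the bot asked."""

    def __init__(self) -> None:
        self.last_question: Optional[str] = None

    def ask(self, question: str) -> str:
        # Record each question before it's spoken so it can be repeated on request.
        self.last_question = question
        return question

    def repeat_if_requested(self, utterance: str) -> Optional[str]:
        """Return the question to ask again, or None to process the utterance as an answer."""
        text = utterance.strip().lower().rstrip(".?!")
        if text in REPEAT_PHRASES and self.last_question is not None:
            return self.last_question
        return None
```

After the repeated question is answered, the flow resumes normally, because the answer is processed against the same question.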

Validate consumer responses and handle errors

By nature, Messaging conversations tend to be more focused and specific than Voice conversations. Rarely does a consumer type into the messaging window something that is out of context.

However, in a voice conversation, there’s a lot more room for misunderstanding by the bot. People tend to speak quickly, use “filler” words (“uh,” “hm,” etc.), and say things that are out of context, and the system can also pick up other noises and voices. As a result, the response that the bot understands as the answer to a question might not be what the consumer intended.

To keep things on track, always build into the bot design logic that validates the consumer’s response, e.g., “Okay, the last four letters of your last name are S, M, I, T. Is that correct?” And handle errors gracefully, e.g., “We heard {this}, but that doesn’t seem quite right. Could you please repeat your answer?” These practices help keep the conversation from going awry.
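The confirmation step above boils down to reading the captured value back and interpreting a yes/no reply. This is a minimal sketch; the word lists in YES_WORDS and NO_WORDS are assumptions to tune for your consumers, and a None result is meant to route to your error-handling prompt.

```python
from typing import Optional

# Assumed sets of spoken confirmations; tune them to your consumers.
YES_WORDS = {"yes", "yeah", "yep", "correct", "that's right"}
NO_WORDS = {"no", "nope", "incorrect", "that's wrong"}


def spell_back(letters: str, what: str = "the last four letters of your last name") -> str:
    """Build a confirmation prompt that reads captured letters back one at a time."""
    spelled = ", ".join(letters.upper())
    return f"Okay, {what} are {spelled}. Is that correct?"


def parse_confirmation(utterance: str) -> Optional[bool]:
    """True for yes, False for no, None if the reply is neither (handle it as an error)."""
    text = utterance.strip().lower().rstrip(".!")
    if text in YES_WORDS:
        return True
    if text in NO_WORDS:
        return False
    return None


print(spell_back("smit"))
```

Stripping a trailing period matters here because speech-to-text can add one (for example, “Yes.”).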

Start quickly with a bot template

To get you up and running quickly, LivePerson offers a set of predefined, industry-specific bot templates too. These can enable rapid adoption of automation. You can find more info on available bot templates in this section.

Build a bot using LivePerson's Voice bot capability

To build Voice bots, you use LivePerson’s Conversation Builder application. This lets you use the same, familiar application that you use to build your Messaging bots.

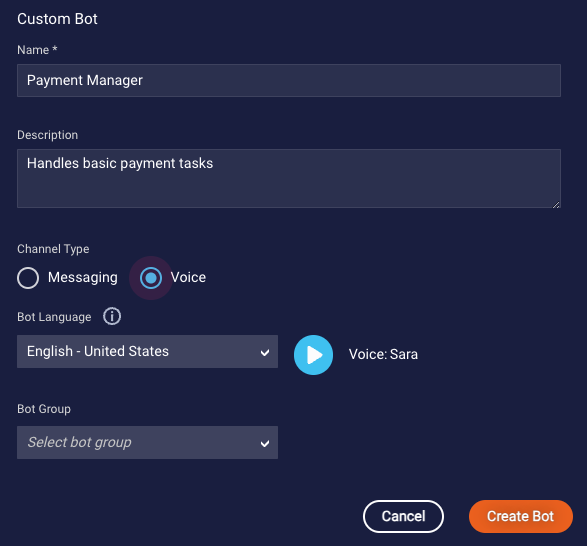

Create the bot

- Access Conversation Builder.

- In the upper-right corner, click New Bot.

- In the Choose a Bot Template window, select Custom Bot.

- Under Custom Bot, enter the bot’s information.

  Note the following:

  - Channel Type: Select Voice.

  - Bot Language: Select a variant of the language depending on the accent that you want. (Learn about supported languages.)

    You can change the bot's voice and configure other voice behaviors in Voice Settings. However, the bot's language can't be changed after the bot is created.

  - Bot Group: You can’t have both Voice and Messaging bots in the same bot group, so here you can only select from the list of bot groups that you’ve created specifically for Voice. If desired, you can skip this setting and add the bot to a group later.

- Click Create.

- Build out the bot per your requirements.

Build out the bot

Once you create the Voice bot, the Dialog Editor opens so you can begin to build out the bot. By default, a custom Voice bot is created with two dialogs:

- Welcome dialog: Starts the conversation properly by matching the initialize_conversation_voice pattern. Learn how voice conversations begin.

- Fallback dialog: Provides a simple fallback response for when the bot doesn’t understand the consumer.

In the Dialog Editor, the interaction tool palette on the right displays only the interactions that are permitted for the Voice channel.

Configure bot settings

Learn how to configure bot settings.

Deploy the bot

See the discussion on deployment later in this article.

Interactions for Voice bots

There are several interactions that are specific to Voice bots. These include:

- Speech statement

- Audio statement

- Speech question

- Audio question

- Transfer Call integration

- Send SMS integration

- Transfer to Messaging integration

That said, you can also use some interactions that are common to both messaging bots and voice bots. Use the channel dropdown in the interaction palette to understand which interactions are supported in the Voice channel.

Bot & voice settings

Important considerations

Human-like experiences via SSML

The consumer’s experience with your voice bot should feel, well, human-like. For example, you want the bot to be able to move from cheerful to empathetic when appropriate, to pause, to emphasize words, to pronounce words a certain way, and so on. To achieve this goal, you can add Speech Synthesis Markup Language (SSML) to the interactions in the bot. You can use any of the tags described on this Microsoft SSML page, which might vary slightly from the W3C specification.

If a message in an interaction in the bot contains SSML, the bot's voice settings are ignored. So be sure to fully specify the desired voice characteristics using SSML.

Also see Microsoft’s user-friendly reference on SSML and its testing tool.
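As an illustration, the helper below builds an SSML string that fully specifies the voice (because voice settings are ignored when a message contains SSML) and adds a pause and emphasis. It's a sketch under assumptions: en-US-JennyNeural is a Microsoft neural voice used only as an example, and whether your bot's messages should include the full speak root element is something to confirm against the Microsoft SSML reference and your own testing.

```python
from xml.sax.saxutils import escape


def build_ssml(greeting: str, detail: str, emphasized: str,
               voice: str = "en-US-JennyNeural", lang: str = "en-US") -> str:
    """Wrap plain text in SSML with an explicit voice, a short pause, and emphasis."""
    # Escape &, <, and > so message text can't break the markup.
    g, d, e = escape(greeting), escape(detail), escape(emphasized)
    return (
        f'<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="{lang}">'
        f'<voice name="{voice}">'
        f'{g}<break time="500ms"/>'
        f'{d} <emphasis level="moderate">{e}</emphasis>'
        '</voice></speak>'
    )


print(build_ssml("Hi there!", "Your order ships", "today."))
```

Escaping matters if any part of the message comes from variables, since a stray ampersand would otherwise make the SSML invalid.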

Pre-recorded audio

While a text-to-speech (TTS) solution can be easier to build and maintain in the long run, pre-recorded audio is sometimes useful. You might have info that is already available in recorded form, or the info might be best conveyed using content that isn't exclusively speech. Pre-recorded audio might include greetings, menu options, disclosures, announcements, and more. You must host the audio files yourself.

What’s more, the domain for the pre-recorded audio files must be whitelisted in our system before the audio files can be used. Contact your LivePerson representative to assist with this.

Language detection

At the start of an automated voice conversation, you often want to detect the consumer’s language and use that to drive the bot flow. Consider a conversation like this one:

Bot 1: How can I help you today?

Consumer: ¿Hablas español?

Bot 2: ¿Cómo puedo ayudarte?

Consumer: ¡Excelente! Estoy llamando porque quiero una velocidad de Internet más rápida.

In the flow above, English-language Bot 1 detects the language of the consumer’s first message and immediately transfers the conversation to Spanish-language Bot 2.

You can power this behavior using the Language Detected condition match type. This condition match type triggers the specified Next Action when the consumer input matches the language and locale that you specify in the rule.

The Language Detected condition match type isn’t available by default. Contact your LivePerson representative to enable this feature. Also be aware that it’s only available in Voice bots.

In our example conversation above, we directed the flow to a dialog in English-language Bot 1 that performs a manual transfer to Spanish-language Bot 2 using an Agent Transfer interaction.

Looking to support a flow like this? "Press 1 for English or stay on the line. Press 2 for…" This kind of language-based "stay on the line" scenario can be achieved using 1) rules that use the Language Detected condition match type, and 2) the Time out and proceed if no response setting in the interaction's Advanced settings. Learn about this setting in the section that follows.
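Conceptually, “stay on the line” routing is a decision over three inputs: a keypress, a detected language, or a timeout. The sketch below is hypothetical throughout: the locale code, the next-action names, and the single DTMF mapping are placeholders for menu options you define. In Conversation Builder, this logic lives in the interaction's rules (using the Language Detected condition match type) and its Time out and proceed setting, not in code.

```python
from typing import Optional

# Placeholder routing tables (hypothetical names and locale code).
LANGUAGE_ROUTES = {"es-US": "transfer_to_spanish_bot"}
DTMF_ROUTES = {"1": "english_main_menu"}
STAY_ON_LINE_ROUTE = "english_main_menu"  # where silence ("stay on the line") leads


def next_action(dtmf: Optional[str], detected_locale: Optional[str], timed_out: bool) -> str:
    """Pick the next action for a 'press 1 or stay on the line' style question."""
    if dtmf is not None and dtmf in DTMF_ROUTES:
        return DTMF_ROUTES[dtmf]
    if detected_locale is not None and detected_locale in LANGUAGE_ROUTES:
        return LANGUAGE_ROUTES[detected_locale]
    if timed_out:
        # No response within the timeout: treat silence as "stay on the line".
        return STAY_ON_LINE_ROUTE
    return "reprompt"
```

Checking the keypress first reflects the assumption that an explicit menu choice should win over a detected language.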

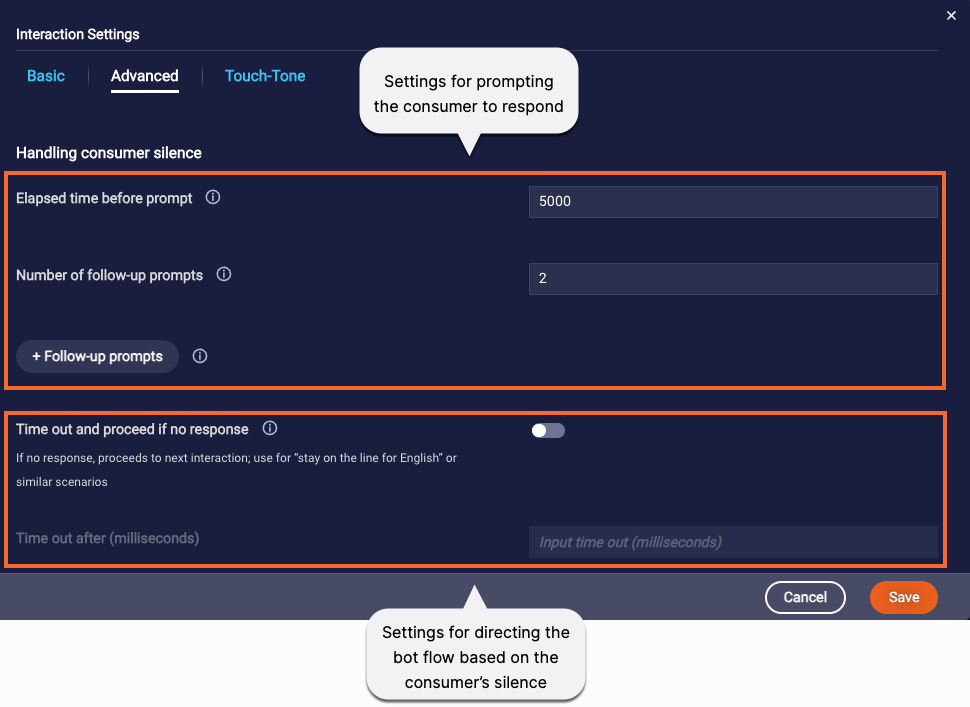

Consumer silence

Consumer silence can be handled in one of three ways:

- Prompt the consumer to respond to the question. This is a best practice for getting the consumer back on track in the conversation.

- Wait for a response for a specified amount of time. If none is received, proceed to the next interaction. This approach is often used when you want to take the consumer's silence as direction for how to direct the bot flow, for example, "press 1 or stay on the line for English," "press 1 or stay on the line for Sales," etc.

- Wait for a response for 2 minutes. If none is received, close the call.

Configure the applicable settings per your requirements.

Settings for "Prompt to consumer"

- Elapsed time before prompt: After sending the question, this is the number of milliseconds to wait before prompting the consumer to respond.

- Number of follow-up prompts: Select the number of follow-up prompts to send to the consumer if they don’t answer the question. If you select “1” or higher, the system sends the prompts you define. If you don’t define prompts, the system uses the question as the prompt. To not send follow-up prompts, select “0” (zero).

- Follow-up prompts: Enter the messages to send to the consumer when they don’t respond to the question. Prompts typically start with, “Sorry, I didn’t catch that,” “Let’s try again,” and then rephrase the original question. You can define fewer prompts than you send. For instance, if you choose to send three prompts but only define two, the first is resent as the third prompt. (Prompts are selected in circular fashion.) You can also use botContext and environment variables, and you can apply SSML.

If there’s still no response from the consumer after the follow-up prompts are sent, the conversation is closed after waiting 2 more minutes.
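The prompt-selection rules above (no prompts when the count is zero, the question itself when no prompts are defined, and circular reuse when fewer prompts are defined than sent) can be expressed in a few lines. This sketch models only that documented selection behavior; the function name is hypothetical, and the timing (Elapsed time before prompt, and the final 2-minute wait before closing) is left to the platform.

```python
def follow_up_prompts(question: str, defined: list[str], count: int) -> list[str]:
    """Return the follow-up prompts that would be sent, in order.

    - count == 0: no follow-up prompts.
    - No prompts defined: the question itself is used as the prompt.
    - Fewer prompts defined than sent: prompts are reused in circular order.
    """
    if count <= 0:
        return []
    pool = defined if defined else [question]
    return [pool[i % len(pool)] for i in range(count)]


print(follow_up_prompts("What's your zip code?",
                        ["Sorry, I didn't catch that. What's your zip code?",
                         "Let's try again. Please say your five-digit zip code."],
                        3))
```

With three prompts to send and two defined, the third prompt is the first one again, matching the example in the Follow-up prompts setting.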

Settings for "Time out and proceed"

- Time out after (milliseconds): Enter the number of milliseconds to wait for a response before proceeding to the next interaction.

It isn't possible to configure handling of consumer silence at the bot level. This is done at the interaction level.

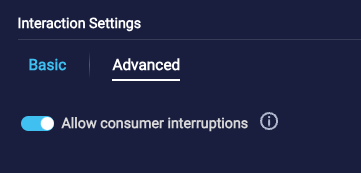

Consumer interruptions

Often, you want to allow the consumer to interrupt the Voice bot while it’s playing a message. A good example of when you might want this is a lengthy prompt where the bot is providing detailed instructions on how to input info: “…Please enter two digits for the month, two digits for the day, and four digits for the year.”

By default, all statement (Speech, Audio) and question (Speech, Audio) interactions allow the consumer to interrupt the Voice bot. When the consumer does this by saying something or entering/tapping a value, the Voice bot stops playing its message and immediately processes and responds to the consumer’s message.

There’s a nuance here to be aware of: Assume you have a statement followed by a question. If the consumer interrupts the statement, the consumer’s message is evaluated against the question and processed accordingly. As a result, if the message is an expected answer to the question, the question is skipped (i.e., it's not sent).
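To make the nuance concrete, here's a toy trace of a statement followed by a question. It's a sketch under assumptions, not the platform's implementation: the interrupting input is evaluated against the question, and if it's an expected answer, the question is never played. What happens to other input depends on the question's rules, so that branch is deliberately left unmodeled.

```python
from typing import Callable, Optional


def statement_then_question(
    statement: str,
    question: str,
    is_expected_answer: Callable[[str], bool],
    interruption: Optional[str] = None,
) -> list[str]:
    """Toy trace of a statement followed by a question.

    interruption: what the consumer said or keyed while the statement was playing, if anything.
    """
    if interruption is None:
        return [f"PLAY {statement}", f"PLAY {question}"]
    # The bot stops the statement, and the input is evaluated against the question.
    trace = [f"STOP {statement}"]
    if is_expected_answer(interruption):
        # Expected answer: the question is skipped (not sent) and the answer is processed.
        trace.append(f"ANSWER {interruption}")
    else:
        # Not modeled here: the input is handled by the question's other rules.
        trace.append(f"OTHER {interruption}")
    return trace


def is_date(s: str) -> bool:
    # Hypothetical validator: eight digits, e.g., MMDDYYYY entered via the keypad.
    return s.isdigit() and len(s) == 8


print(statement_then_question("Enter two digits for the month...", "What's your date of birth?",
                              is_date, "01021990"))
```

In practice, this means a consumer who already knows the expected format can key it in during the instructions and skip straight past the question.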

Background noise

It’s important to note that when you allow consumer interruptions, background noise (roadway noise, music playing in the background, etc.) doesn’t interrupt the conversation. The system automatically disregards such noises. However, voices in the background or echoes of voices (which can occur if you have multiple speaker devices near your phone) might interrupt the conversation.

Preventing consumer interruptions

In both statements and questions, you can enable or disable this ability using the Allow consumer interruptions setting.

Turn off the ability to interrupt for messages that should be heard in full, such as legal notices. When the setting is disabled, if the consumer interrupts the Voice bot while it’s playing the message, the consumer’s input—whether speech or touch-tone (DTMF)—is simply discarded.

It’s not possible to configure handling of consumer interruptions at the bot level. This is done at the interaction level.

Context switching

Like in Messaging bots, context switching is enabled by default in Voice bots. If context switching isn’t desirable for your use case, you can disable it at the conversation’s start, or at a specific point.

Fallback

Fallback works like it does for Messaging bots.

As a best practice, always include a Fallback dialog. You can customize it per your requirements. For example, if the Voice bot doesn't understand the consumer's message, you might want to restart the consumer at the beginning of the conversation, return to a specific IVR menu, transfer to a human agent at a third-party voice contact center, etc.

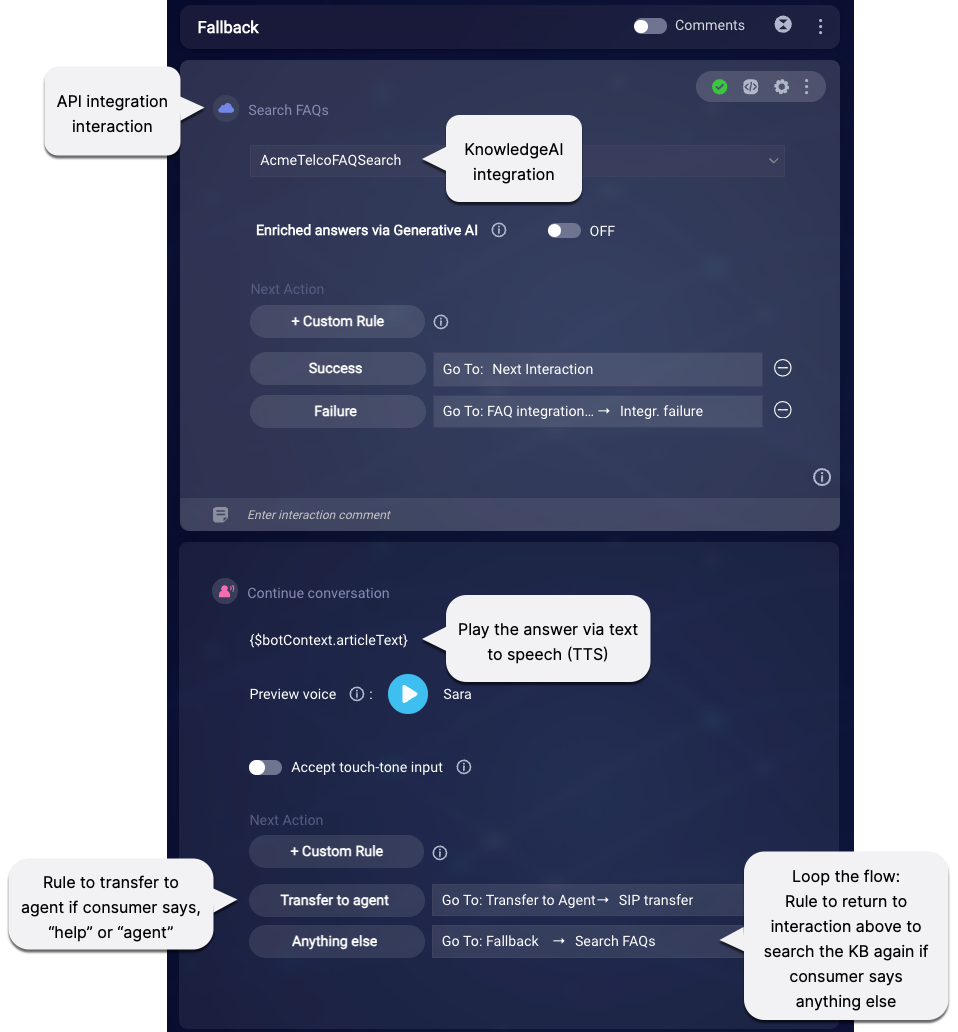

KnowledgeAI integrations in Voice bots

Here’s a common approach to answering questions in a voice bot:

For the best consumer experience:

- Keep your article content as short as possible. (Internal knowledge bases have character limits.)

- Keep your URLs as short as possible too. They should take the form of “www.mysite.com,” i.e., without the “http://” prefix, which doesn’t come across well over the Voice channel.

In a Speech statement or question in the voice bot, you can wrap the article's text in SSML, though we haven't done this in the image above. However, you can't add SSML directly to the article's text within KnowledgeAI™; this isn't supported.
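If URLs in your content are assembled dynamically, you can strip the scheme before they're spoken. This is a minimal sketch using Python's standard urllib.parse. Dropping the scheme and any trailing slash while keeping the rest is an assumption based on the guidance above, and the function name is hypothetical.

```python
from urllib.parse import urlsplit


def speakable_url(url: str) -> str:
    """Drop the scheme (e.g., http://) and a trailing slash so the URL reads well aloud."""
    # Prefix "//" when there's no scheme so urlsplit still treats the host as the netloc.
    parts = urlsplit(url if "://" in url else "//" + url)
    text = parts.netloc + parts.path
    if parts.query:
        text += "?" + parts.query
    return text.rstrip("/")


print(speakable_url("https://www.mysite.com/"))
```

Cleaning URLs this way at authoring time keeps the article text itself free of schemes, which is the form the guidance above recommends.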

Automated answers enriched via Generative AI

You can automate LLM-powered answers that are smarter, warmer, and better. We call these enriched answers.

Transfers

Transfer to another Voice bot

Take advantage of automatic transfers by putting the Voice bot in a bot group with other Voice bots.

Or, perform a manual transfer to another Voice bot using the Agent Transfer interaction.

If you add an Agent Transfer interaction to a Voice bot after creating and starting the bot's agent connector, you must restart the existing agent connector before testing the change.

Manual transfer to a human agent

To transfer the conversation to a human agent in a third-party contact center, use the Transfer Call interaction. Importantly, by making use of the Conversation Context Service, you can pass along the conversation’s context (e.g., consumer name and other relevant information) when you transfer the conversation.

The Transfer Call integration lets the bot make a SIP call to the contact center over a SIP trunk, or make a call to the center's E.164 number over the global public switched telephone network (PSTN).
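Before configuring a PSTN transfer destination, it can help to sanity-check that the number is in E.164 format: a leading “+”, a country code that doesn't start with 0, and at most 15 digits in total. The sketch below checks only that shape, not whether the number is assigned or reachable; the function name is hypothetical.

```python
import re

# E.164 shape: "+" followed by 2 to 15 digits, where the first digit (the country code) isn't 0.
E164_RE = re.compile(r"\+[1-9]\d{1,14}")


def is_e164(number: str) -> bool:
    # fullmatch rejects spaces, dashes, and extra digits anywhere in the string.
    return E164_RE.fullmatch(number) is not None


print(is_e164("+14155552671"), is_e164("(415) 555-2671"))
```

A number written with spaces or punctuation fails the check, so normalize it to digits with a leading “+” before entering it.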

Automatic transfer to a human agent

Repeated use of the Fallback dialog in a conversation can put the consumer in a frustrating loop, so LivePerson recommends that you include an Auto Escalation dialog in your Voice bot. Use it to “free” the consumer from the loop and transfer the call to a human agent in a third-party voice contact center.

The Auto Escalation dialog works as it does for Messaging bots, except that its default configuration differs slightly. For example, it includes a Transfer Call interaction (which is for Voice), not an Agent Transfer interaction (which is for Messaging).

If you add an Auto Escalation dialog, you must fully configure it as per your requirements.

Transfer to a Messaging channel

Use the Transfer to Messaging interaction.

Integrating with external systems

The Integration interaction is available. Use it when a Voice bot needs to make a programmatic call, for example, to retrieve data from or post data to an external system, or to perform some action.

The Web View Integration API is also supported for integrations with external systems (e.g., for integrating with your payment system of choice for processing payments).

Scripting functions

Currently, there aren’t any scripting functions that are specifically designed for use with Voice bots. That said, you can use many of the existing scripting functions in a Voice bot, as many are still applicable (e.g., sendMessage).

Closing conversations

Voice conversations are closed when one of the following happens:

- The consumer hangs up.

- The consumer loses the signal, such that the call is dropped from their side.

- The conversation is closed as a next action. That is, in the dialog, the Next action is “Close Conversation.”

Testing

- As you design and build the Voice bot, preview the conversational experience. During this phase, you can debug errors by examining the bot’s logs using Bot Logs.

- Before moving the Voice bot to Production, deploy it and perform end-to-end testing in the Voice channel itself. Verify the bot performs as expected using actual phone calls to a phone number that you’ve claimed. Consider claiming a phone number to use exclusively for testing purposes.

You can also test any deployed Voice bot using the Conversation Tester.

Deployment

After you build the Voice bot and preview its conversation flow, deploy it, so you can perform end-to-end testing. Generally speaking, you deploy a Voice bot in the same way that you deploy a Messaging bot, although there are some configuration steps that differ.

- Create the skill. (You should have already done this when you claimed a phone number, which includes assigning the skill to the number.)

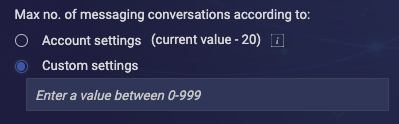

-

Create the bot user. Make sure to:

- Assign it the skill you created in step 1.

- Assign it to the “Agent” profile.

- Set the Max no. of messaging conversations to the number of calls that you want the Voice bot to handle simultaneously. Select Custom settings, and enter the number.

For example, if you expect that the bot needs to handle 200 calls simultaneously, set this slightly higher, i.e., to 250. If you’re unsure of the number that you need, use 999. To scale higher than 999, you’ll need to add multiple agent connectors for the bot.

-

In the bot, add an agent connector and assign it the bot user you created in step 2.

Don’t add the custom configuration fields for resolving stuck conversations. These only work for Messaging bots.
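The sizing guidance for Max no. of messaging conversations (set when creating the bot user) can be turned into a quick calculation. This is a sketch under assumptions: the 25% headroom mirrors the 200-to-250 example but isn't a documented rule, the 999 ceiling per bot user comes from the guidance above, and splitting the target evenly across agent connectors is one reasonable approach, not a prescribed one.

```python
import math

MAX_PER_BOT_USER = 999  # highest value suggested above for a single bot user


def plan_capacity(expected_concurrent_calls: int, headroom: float = 0.25) -> tuple[int, int]:
    """Return (max conversations per bot user, number of agent connectors)."""
    if expected_concurrent_calls <= 0:
        raise ValueError("expected_concurrent_calls must be positive")
    # Add headroom above the expected peak, as in the 200 -> 250 example.
    target = math.ceil(expected_concurrent_calls * (1 + headroom))
    connectors = math.ceil(target / MAX_PER_BOT_USER)
    # Spread the target evenly so no single bot user exceeds the ceiling.
    per_connector = math.ceil(target / connectors)
    return per_connector, connectors


print(plan_capacity(200))
print(plan_capacity(1000))
```

For an expected peak of 1,000 calls, the sketch suggests two agent connectors, each with a bot user set to 625 conversations.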

In the case of a Voice bot, when the consumer makes a call to a phone number, if the phone number’s assigned skill matches the Voice bot’s assigned skill, the Voice bot answers the call.

Analytics & reporting

Measuring success

Use the Bot Analytics application to evaluate the performance and effectiveness of your Voice bots. All of the insightful analytics that are available for Messaging bots are also available for Voice bots. Notably, this also means that every closed conversation with a Voice bot gets a Meaningful Automated Conversation Score or MACS. Check the overall MACS for the Voice bot to quickly understand overall conversation quality. Then dive into the MACS details to learn where opportunities for tuning might exist.

Within Bot Analytics, a few key terms have slightly different definitions for Voice bots:

- Transfers: Previously called "Escalations." This metric is the total number of conversations intentionally or unintentionally (auto escalated) transferred to a human agent. For Voice bots, this metric includes SIP transfers and E.164 transfers to agents using the Voice channel. This metric doesn't include transfers to a Messaging channel.

- Conversations: This metric reflects the total number of calls in which the Voice bot participated. Includes closed and open conversations; excludes those performed in Preview.

Reviewing conversation transcripts

You can review text transcripts of closed Voice bot conversations within Bot Analytics.

Additionally, text transcripts and audio recordings are available in real time and post-call within the Agent Workspace in Conversational Cloud.

Personally identifiable info (PII) and payment card industry (PCI) info can be redacted from post-call transcripts and recordings. Contact your LivePerson representative to set this up.

If you’re recording calls and interested in performing post-call analysis of those recordings, talk to your LivePerson representative about our Voicebase product.

Troubleshooting

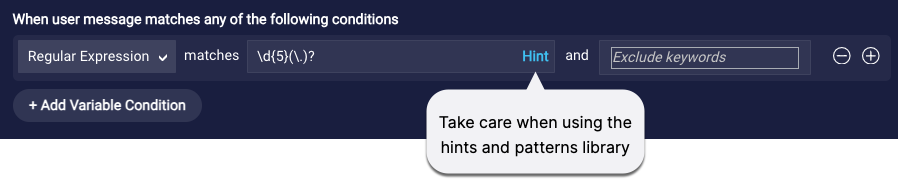

Consumer utterances aren’t being recognized

Speech-to-text processing sometimes adds punctuation to consumer utterances automatically. For example, if the consumer says their zip code is “98011,” the Voice bot might understand this as either:

- 98011

- 98011.

Take note of the period in the second value above.

Similarly, if the consumer says, “Yes,” the Voice bot might understand this as either:

- Yes

- Yes.

As a result, in your custom rules that validate consumer input, remember to always check for both possibilities: with and without the period. In our case of the zip code above, we would need a custom Regular Expression that checks for a 5-digit number with an optional period character:

\d{5}(\.)?

Additionally, use caution when using the hints and pattern library that are available in the UI. You’ll need to customize the values based on the info above.
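A quick way to confirm that a validation pattern tolerates the trailing period is to test it against both forms. This sketch uses Python's re module with fullmatch, which requires the whole input to match, so “980112” is rejected. Whether Conversation Builder's Regular Expression match checks the whole input or any substring isn't stated here; if your rule engine matches substrings, the anchored equivalent is ^\d{5}(\.)?$. The helper names are hypothetical.

```python
import re

# The pattern from the text: five digits, optionally followed by a period.
ZIP_RE = re.compile(r"\d{5}(\.)?")
# The same idea for "Yes" and "Yes." (case-insensitive).
YES_RE = re.compile(r"yes(\.)?", re.IGNORECASE)


def is_zip(utterance: str) -> bool:
    # fullmatch requires the entire utterance to match the pattern.
    return ZIP_RE.fullmatch(utterance.strip()) is not None


def is_yes(utterance: str) -> bool:
    return YES_RE.fullmatch(utterance.strip()) is not None


for sample in ["98011", "98011.", "980112", "9801"]:
    print(f"{sample!r}: {is_zip(sample)}")
```

Running a handful of samples like these against each custom pattern is a cheap way to catch the missing-period case before testing with real calls.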

FAQs

Can I connect a third-party voice bot?

Yes, if you have a third-party bot that’s been designed to work in the Voice channel, you can integrate it.

Can I convert a Messaging bot to a Voice bot?

No. Messaging bots and Voice bots are fundamentally different; for example, each supports a different set of interaction types. For this reason, you can’t export a Messaging bot from Conversation Builder and import it as a Voice bot. Instead, create the Voice bot manually.

However, you can export a Voice bot and import it again as a new Voice bot.

Can I change the default voice that’s used by the Voice bot?

Yes, you can do this in the bot's Voice Settings.

How can I let the consumer know that the bot is thinking or at work performing an action?

Currently, there’s no way to do this, but stay tuned for enhancements in this area.

When the consumer is responding to a question, how does the bot know when the consumer is finished speaking?

The system uses continuous automatic speech recognition (ASR) to “collect” the consumer’s response over several seconds. After this time has elapsed, the system considers the consumer to be finished speaking.

When the consumer is responding to a question, what happens if the consumer pauses while speaking?

If the consumer says something, pauses, and then says something else, what the Voice bot receives depends on the length of the pause. If the pause is longer than 500 milliseconds, the two utterances might be received as separate messages. If it’s shorter than 500 milliseconds, they might be concatenated and received as a single message.
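The pause behavior can be pictured as gap-based segmentation of recognized speech. The following Python sketch is an illustration only, not LivePerson’s implementation: it assumes each recognized word arrives with hypothetical start and end timestamps in milliseconds, and it starts a new message wherever the silence between words exceeds the 500-millisecond figure above. Because the real system only “might” split or merge messages, treat the threshold as approximate.

```python
from dataclasses import dataclass
from typing import List, Optional

PAUSE_THRESHOLD_MS = 500  # figure taken from the description above


@dataclass
class Word:
    """A recognized word with hypothetical timing info, in milliseconds."""
    text: str
    start_ms: int
    end_ms: int


def segment(words: List[Word], threshold_ms: int = PAUSE_THRESHOLD_MS) -> List[str]:
    """Group words into messages, starting a new message after a long pause."""
    messages: List[List[str]] = []
    previous_end: Optional[int] = None
    for word in words:
        # Start a new message for the first word, or when the silence since
        # the previous word is longer than the threshold.
        if previous_end is None or word.start_ms - previous_end > threshold_ms:
            messages.append([])
        messages[-1].append(word.text)
        previous_end = word.end_ms
    return [" ".join(parts) for parts in messages]


if __name__ == "__main__":
    short_pause = [Word("my", 0, 200), Word("account", 250, 700),
                   Word("balance", 1000, 1500)]  # 300 ms pause
    long_pause = [Word("my", 0, 200), Word("account", 250, 700),
                  Word("balance", 1500, 2000)]   # 800 ms pause
    print(segment(short_pause))  # ['my account balance']
    print(segment(long_pause))   # ['my account', 'balance']
```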

What happens if the consumer never responds to a question?

If the consumer never responds to a question, even after a number of follow-up prompts, the conversation is closed.
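Conceptually, this is a bounded re-prompt loop. The Python sketch below is hypothetical: `ask` stands in for playing a prompt and waiting for speech (returning `None` on silence), and `MAX_REPROMPTS` is an assumed value, because the actual number of follow-up prompts isn’t stated here.

```python
from typing import Callable, Optional

MAX_REPROMPTS = 2  # assumed value; the real number is set by the platform


def collect_response(
    question: str,
    ask: Callable[[str], Optional[str]],
    max_reprompts: int = MAX_REPROMPTS,
) -> Optional[str]:
    """Ask a question, re-prompting on silence; None means close the conversation."""
    # One initial attempt plus up to max_reprompts follow-up prompts.
    for _ in range(1 + max_reprompts):
        reply = ask(question)
        if reply is not None:
            return reply
    return None  # no response after all prompts: the caller closes the conversation


if __name__ == "__main__":
    def silent_consumer(prompt: str) -> Optional[str]:
        return None  # simulates a consumer who never answers

    if collect_response("What's your account number?", silent_consumer) is None:
        print("Closing the conversation")
```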

What happens if the consumer says two intents, such as “I want to get my account balance and order a new credit card”?

Currently, the bot handles only the highest-scoring intent detected in the consumer’s message, following the flow you designed for that intent. As a best practice, include in your bot flow a question like “Is there anything else I can help you with?” so the consumer can raise any other request.
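A minimal Python sketch of this selection logic, assuming the NLU step returns a hypothetical mapping of intent names to confidence scores (the names and scores below are made up): only the top-scoring intent is chosen, and the follow-up question gives the consumer a chance to raise the other one.

```python
from typing import Dict

FOLLOW_UP = "Is there anything else I can help you with?"


def pick_intent(scores: Dict[str, float]) -> str:
    """Return the highest-scoring intent name; raises ValueError if scores is empty."""
    return max(scores, key=lambda name: scores[name])


if __name__ == "__main__":
    # Made-up scores for "I want to get my account balance and order a new credit card"
    scores = {"account_balance": 0.82, "order_credit_card": 0.74}
    print(pick_intent(scores))  # account_balance
    # After handling the chosen intent, invite the consumer to raise the other one.
    print(FOLLOW_UP)
```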

What does the bot receive when the consumer says something like “one oh one” or “one double zero”?

This kind of everyday phrasing is supported and interpreted correctly. For example, “one oh one” is received as 101, and “one double zero” is received as 100.
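The platform performs this conversion for you. Purely to illustrate the idea, here is a simplified Python sketch that covers only the patterns in the examples: single digit words, “oh” as zero, and “double”/“triple” as repeats. It is not LivePerson’s implementation, and it deliberately ignores forms such as “one hundred”.

```python
from typing import Dict, List

# Words for single digits; "oh" and "o" are common spoken forms of zero.
DIGITS: Dict[str, str] = {
    "zero": "0", "oh": "0", "o": "0", "one": "1", "two": "2", "three": "3",
    "four": "4", "five": "5", "six": "6", "seven": "7", "eight": "8", "nine": "9",
}
# Multipliers that repeat the digit word that follows them.
REPEATS: Dict[str, int] = {"double": 2, "triple": 3}


def spoken_digits_to_string(utterance: str) -> str:
    """Convert a spoken digit sequence such as "one double zero" to "100".

    Returns a string rather than an int so that leading zeros survive.
    Raises ValueError for any word outside this small vocabulary.
    """
    result: List[str] = []
    repeat = 1
    for token in utterance.lower().split():
        if token in REPEATS:
            repeat = REPEATS[token]
        elif token in DIGITS:
            result.append(DIGITS[token] * repeat)
            repeat = 1
        else:
            raise ValueError(f"unsupported word: {token!r}")
    if repeat != 1:
        raise ValueError("'double' or 'triple' must be followed by a digit")
    return "".join(result)


if __name__ == "__main__":
    print(spoken_digits_to_string("one oh one"))       # 101
    print(spoken_digits_to_string("one double zero"))  # 100
```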

What happens if the bot doesn’t understand the consumer?

When the Voice bot doesn’t understand the consumer’s response, a fallback response is triggered (unless you’ve set up a No Match rule). If the response was to a question, the question is then repeated.

Repeated use of a fallback response can put the consumer in a frustrating loop. We recommend that you include an Auto Escalation dialog to “free” the consumer from this loop and transfer the call to a human agent in a third-party voice contact center.
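The recommended pattern amounts to counting consecutive misses. In this hypothetical Python sketch, `MAX_FALLBACKS` is an assumed threshold and the returned action strings are placeholders; in Conversation Builder you would achieve this with an Auto Escalation dialog rather than by writing code.

```python
from typing import Optional

MAX_FALLBACKS = 3  # assumed threshold, not a documented default


class FallbackTracker:
    """Tracks consecutive unrecognized replies and decides when to escalate."""

    def __init__(self, max_fallbacks: int = MAX_FALLBACKS) -> None:
        self.max_fallbacks = max_fallbacks
        self.consecutive_misses = 0

    def next_action(self, matched_intent: Optional[str]) -> str:
        """Return a placeholder action for the latest consumer reply."""
        if matched_intent is not None:
            self.consecutive_misses = 0  # understood: reset the counter
            return f"handle:{matched_intent}"
        self.consecutive_misses += 1
        if self.consecutive_misses >= self.max_fallbacks:
            return "escalate_to_agent"  # break the loop with a transfer
        return "fallback_and_repeat_question"


if __name__ == "__main__":
    tracker = FallbackTracker(max_fallbacks=2)
    print(tracker.next_action(None))  # fallback_and_repeat_question
    print(tracker.next_action(None))  # escalate_to_agent
```

Resetting the counter on every understood reply means only an unbroken run of misses triggers escalation, which targets the frustrating loop described above.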

Can I initiate an outbound call to a consumer, where that call is routed to a bot?

No, not at this time.

I want to use the Send SMS interaction. If I have an SMS messaging program, can replies go to a skill?

No, not at this time.

Is this Voice feature and architecture GDPR-compliant?

Yes, it's compliant with the General Data Protection Regulation (GDPR) for the European Union.

Unsupported features

Currently, the following features aren’t supported in Conversation Builder Voice bots:

- Import of third-party Messaging bots into Conversation Builder as Voice bots

- Small Talk feature: Instead, create a dialog that handles small-talk utterances via intent matching. Alternatively, automate answers enriched via Generative AI, and specify a No Article Match prompt.

- Disambiguation dialogs

- Configuration of the Voice bot’s agent connector to resolve stuck conversations

- Transfers:

  - Transfer from one Voice bot to another Voice bot that you explicitly implement via a LivePerson Agent Escalation integration. However, such transfers are supported if implemented via the Agent Transfer interaction.

  - Transfer to a Messaging bot

- Post-conversation surveys over the Voice channel: However, integrations within Voice bots are supported, so we suggest you send an SMS message to the consumer’s phone to initiate a survey over Messaging.

Stay tuned for forthcoming enhancements!