KnowledgeAI versus Generative AI

KnowledgeAI's architecture is built upon robust algorithms and data-driven methodologies that ensure relevant answers to consumer queries. Generative AI isn't used when retrieving answers from your knowledge bases.

That said, if you choose, you can use Generative AI to enrich the retrieved answers using the conversation's context. The resulting responses are grounded in knowledge base content, contextually aware, and natural sounding. Answer enrichment is optional.

Knowledge bases

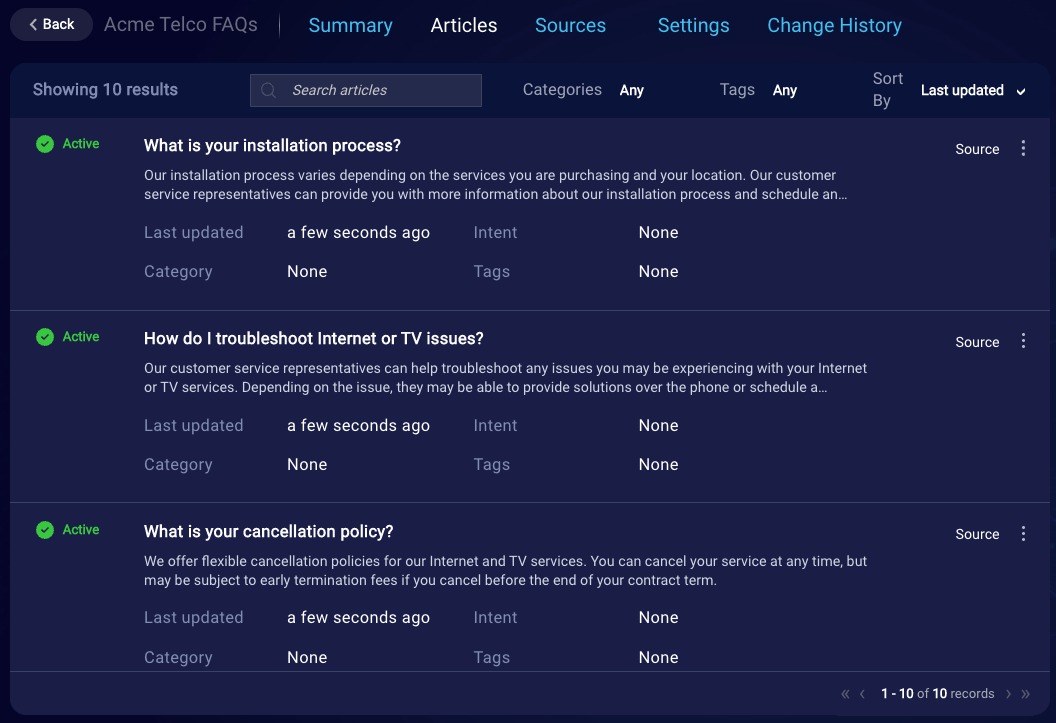

A knowledge base exposes a repository of articles that support a particular classification in your business. As an example, the following is an illustration of an internal knowledge base, which contains telco FAQs.

When used in a bot, a knowledge base is a great tool to answer questions about a variety of topics specific to the bot's area of expertise. Typically, in LivePerson Conversation Builder, you might add a knowledge base integration in a Fallback dialog to provide simple answers to topics not covered elsewhere in the bot. Alternatively, you might have an FAQ bot that is driven by a knowledge base full of articles. Powering bots with intelligent answers can increase containment: It helps to ensure that the conversation stays between the bot and the consumer and that the consumer's need is resolved by the bot.

In the KnowledgeAI application, you add and manage knowledge bases. The knowledge bases either contain articles, or they integrate with an external content source that contains them.

Learn about knowledge base best practices.

Articles

An article is a focused piece of content (a message) on a single topic that you want to serve to consumers.

Learn about article best practices.

Content sources

You can create knowledge bases using a variety of content sources:

- Knowledge management system (KMS) or content management system (CMS)

- CSV file

- Google sheet

You can also start from scratch and author articles directly in a knowledge base.

KMS or CMS

LivePerson recommends integrating with a KMS or CMS because this approach is flexible, powerful, and automatic. The other approaches to content management are manual.

If you have a knowledge management system (KMS) or content management system (CMS) with well-curated content that you want to leverage in bot conversations, you can integrate it with KnowledgeAI. Integrating with your KMS/CMS lets your content creators use familiar tools and workflows to author and manage the content.

You can integrate with any KMS/CMS that has a public API for retrieving knowledge articles. Notable examples include Salesforce and Zendesk.

When you create an internal knowledge base of this type, you use LivePerson's Integration Hub (iHub) to integrate the KMS/CMS. iHub embeds Workato and uses Workato to set up the integration.

CSV files

If your tool of choice is a simple CSV file, you can add an internal knowledge base and import the contents of the file when you create it.

Google sheets

If your tool of choice is a simple Google sheet, you can add an internal knowledge base and link the sheet to it.

Starting from scratch

If you’re starting a knowledge base from scratch and you prefer to work directly in the KnowledgeAI application, you can author the articles there.

Content management

As mentioned above, in KnowledgeAI, you can populate a knowledge base in several ways. Here's an at-a-glance view of how that works:

| Method | Are articles enabled by default? |
|---|---|
| Integrate with KMS/CMS | Yes |
| Import CSV | Yes |
| Import Google sheet | Yes |
| Manual creation within KnowledgeAI | No |

What you can’t do is mix content types within a knowledge base. For example, if you add a knowledge base and import two PDFs into it, you’ll see a Sources page that you can use to add more PDFs, and only PDFs.

User query contextualization

Before performing a knowledge base search for answers, you can pass the user’s query, along with some conversation context, to a LivePerson small language model so that the model can enhance (rephrase) the query. This can significantly improve the relevance and accuracy of the retrieved answers. Query contextualization is optional.

Learn more about query contextualization.

See where query contextualization fits into the overall search flow.

Answer retrieval

There are two different techniques that you can use to retrieve answers from your knowledge bases:

- AI Search: This is KnowledgeAI's powerful, one-size-fits-all search method based on the latest research in deep learning. It gets to the intent, is context-aware and phrasing-agnostic, and requires no setup.

- Intent-based search: This search method uses Natural Language Understanding (NLU) to find relevant answers. This method does require an investment in setup.

You can use either, or both, of the methods above.

As stated earlier in this article, Generative AI isn't used during answer retrieval.

Learn more about these search methods, including the overall search flow.

Answer enrichment

You can use the power of Generative AI to enrich retrieved answers using the conversation's context. The resulting responses are grounded in knowledge base content, contextually aware, and natural sounding. Answer enrichment is optional.

Learn more about answer enrichment.

See where answer enrichment fits into the overall search flow.
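The ordering of the optional and required steps described so far can be sketched in a few lines of Python. This is only an illustration of the sequence (optional query contextualization, then retrieval, then optional enrichment). Every name here (`answer_query`, `contextualize`, `retrieve`, `enrich`) is hypothetical and not part of any KnowledgeAI API, and returning the enriched text as a one-item list is a simplification made for this sketch.

```python
from typing import Callable, Optional

# Hypothetical stage signatures, invented for this sketch only.
Rewriter = Callable[[str, list[str]], str]        # (query, context) -> rephrased query
Retriever = Callable[[str], list[str]]            # query -> retrieved answers
Enricher = Callable[[list[str], list[str]], str]  # (answers, context) -> enriched answer


def answer_query(
    query: str,
    context: list[str],
    retrieve: Retriever,
    contextualize: Optional[Rewriter] = None,
    enrich: Optional[Enricher] = None,
) -> list[str]:
    """Run the stages in the order the text describes."""
    # Optional: rephrase the query using the conversation context.
    search_query = contextualize(query, context) if contextualize else query

    # Required: retrieve answers from the knowledge base (no Generative AI here).
    answers = retrieve(search_query)

    # Optional: enrich the retrieved answers using the conversation context.
    # Assumption of this sketch: with no matches, the empty list is passed through.
    if enrich is None or not answers:
        return answers
    return [enrich(answers, context)]
```

Passing only `retrieve` models a setup with both optional features turned off; supplying all three callables models the full flow.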

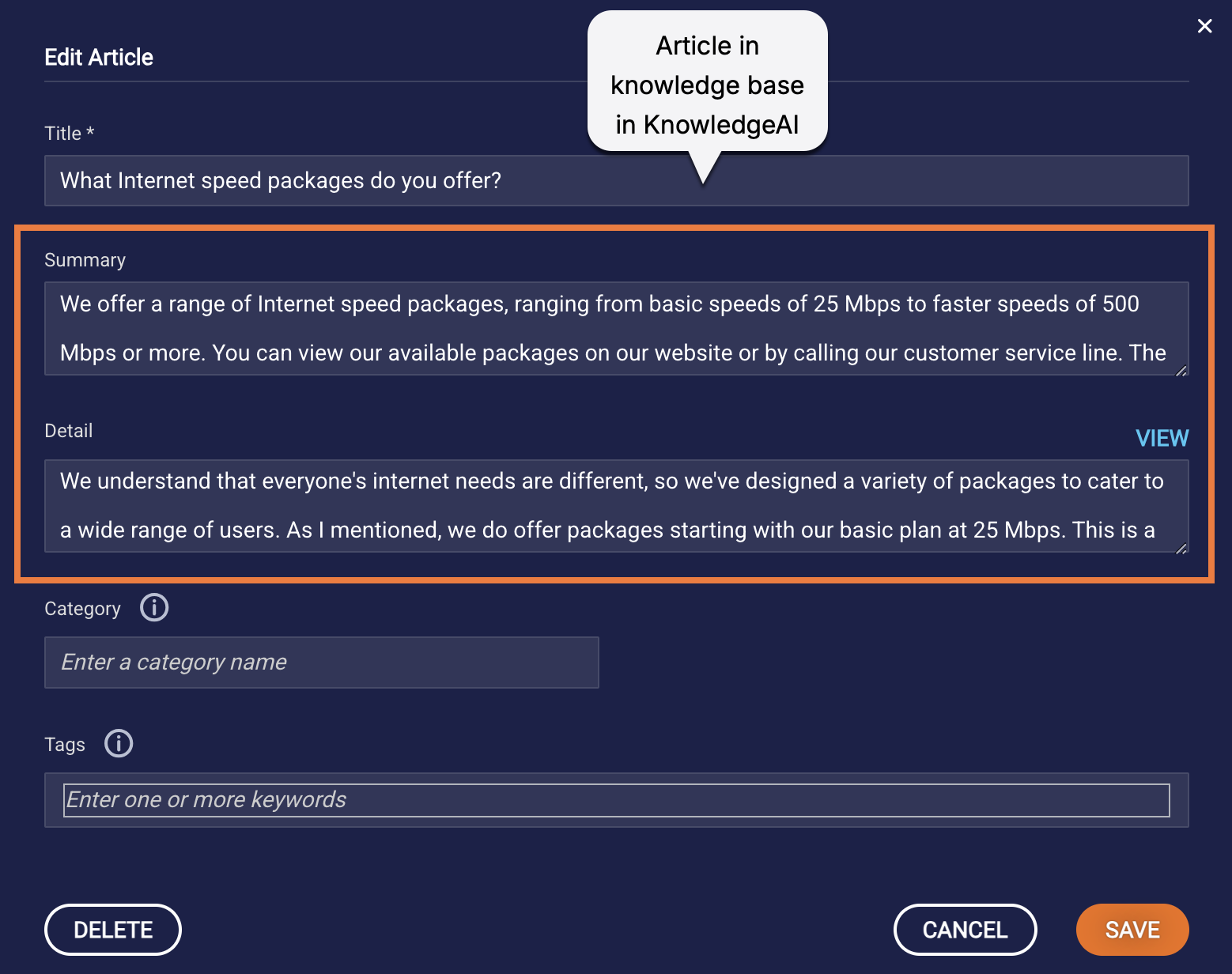

Summary versus Detail

Every article has a Summary field and a Detail field.

Summary stores a brief response to send to the consumer. Detail stores a longer, more detailed response to send to the consumer. (Learn about their character limits.)

There are a few important concepts to understand about Summary and Detail:

- Summary is a required field, but Detail is optional.

- Follow our best practices when entering content into these fields.

- Both fields (among others) are used when searching the knowledge base to retrieve matched articles. (Learn about search methods.)

- If you’re using Generative AI to enrich answers, the system sends the content in Detail to the LLM for enrichment. This is intentional. It ensures that as much info as possible is available to the LLM so that it can generate an enriched answer of good quality. The LLM excels at taking a large amount of info and using it to generate a concise response. So, while Detail is optional, it can play an important role. Importantly, if there’s no Detail in the article, then the content in Summary is sent to the LLM for enrichment instead. (Learn about the answer enrichment flow.) Regardless of which content is sent to the LLM for enrichment, the enriched response is returned to the calling application in the Summary field of the top matched article.

- The content (Summary, Detail, or enriched response) that's sent to the consumer or agent varies based on the use case:

| Application | Using Generative AI? | What's sent to the consumer? | Notes |
|---|---|---|---|
| Conversation Builder bot with a KnowledgeAI interaction | No | Summary | This is true for both the rich and plain “auto render” answer layouts. If you want to send Detail to the consumer, don’t use auto rendering; use a custom layout instead. |
| Conversation Builder bot with a KnowledgeAI interaction | Yes | Enriched answer generated by LLM | |
| Conversation Assist | No | Detail | If Detail is empty, Summary is used instead in the answer recommendation (for the agent to send to the consumer). |
| Conversation Assist | Yes | Enriched answer generated by LLM | Conversation Assist uses this in the answer recommendation (for the agent to send to the consumer). |
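The rules above can be restated as a small Python sketch, which may make the fallbacks easier to follow. It is not LivePerson code: the `Article` class, the function names, and the application labels (`"conversation_builder"`, `"conversation_assist"`) are hypothetical, and the Conversation Builder branch covers only the auto-render layouts described in the table.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Article:
    """Hypothetical stand-in for a knowledge base article."""
    summary: str                  # required
    detail: Optional[str] = None  # optional

    def __post_init__(self) -> None:
        if not self.summary.strip():
            raise ValueError("Summary is a required field")


def content_for_enrichment(article: Article) -> str:
    """Detail is sent to the LLM; Summary is the fallback when Detail is empty."""
    return article.detail if article.detail else article.summary


def content_sent(article: Article, application: str,
                 enriched: Optional[str] = None) -> str:
    """Mirror the table: what reaches the consumer or the agent recommendation."""
    if enriched is not None:
        # Generative AI is in use: the enriched answer is sent.
        return enriched
    if application == "conversation_builder":
        # Auto-render layouts send Summary.
        return article.summary
    if application == "conversation_assist":
        # Detail is used; Summary is the fallback when Detail is empty.
        return article.detail or article.summary
    raise ValueError(f"unknown application: {application}")
```

For example, an article with only a Summary yields that Summary in every non-enriched case, while an article with both fields yields Summary in a Conversation Builder auto-render layout and Detail in Conversation Assist.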

More about Detail

When using the Detail field in a Text interaction in a Conversation Builder bot, very long pieces of text are split into multiple messages (after 1,000 characters) when sent to the consumer, and in rare cases the messages can be sent in the wrong order.

If you need to use a long piece of text, you can use the breakWithDelay tag to force the break at a specific point. Alternatively, you can override the text-splitting behavior by using the setAllowMaxTextResponse scripting function.
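Before publishing, it can help to flag which Detail values are long enough to be split. The following is a minimal Python sketch of such a check, run outside of KnowledgeAI. The function names and the input shape (a mapping from article title to Detail text) are assumptions made for illustration, and the sketch treats "after 1,000 characters" as "longer than 1,000 characters".

```python
SPLIT_THRESHOLD = 1000  # characters, taken from the text above


def exceeds_split_threshold(text: str, threshold: int = SPLIT_THRESHOLD) -> bool:
    """True if the text is longer than the threshold and so may be split."""
    return len(text) > threshold


def find_long_details(details: dict[str, str]) -> list[str]:
    """Return the titles whose Detail text is longer than the threshold."""
    return [title for title, text in details.items()
            if exceeds_split_threshold(text)]
```

Articles flagged this way are candidates for shortening or for an explicit break point.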