For a flexible architecture and an optimal consumer experience, follow these best practices.

Language of content

For optimal performance, add content in only one language to a knowledge base. Don’t create a mixed-language knowledge base.

This applies especially to English content: an English-only knowledge base can use the underlying English-language embedding model. The multilingual embedding model performs very well, but the English-language embedding model performs even better.

Structuring and organizing content

- Take a modular approach, where each knowledge base supports a particular classification in your business: create a knowledge base per category, split the intents into domains along the same categories, and add multiple knowledge base integrations for use in bots. A modular approach like this makes it easier to use a knowledge base for a specific purpose in a bot, and it yields a faster response during the conversation.

- Provide broad coverage within the knowledge base. The more diverse the content is, the more likely it is that the consumer’s query will be matched to an article.

Adding articles

For functional details, see the discussion on how to add an article. Also, learn about article-level limits.

Article coverage

Strive for one topic per article. Split long articles that cover multiple topics into separate articles that each cover a single topic.

Title

At a minimum, enter a complete sentence or question, for example:

- I can't remember my password.

- Do we have a company org chart?

- How do I renew my car registration?

Since the title is used in an AI Search (which is a semantic vector search), we recommend that you expand the title to include different ways of expressing the same query, using different synonyms. For example: How do I renew my car registration? / How can I update the registration for my motor vehicle? / What's the way to re-register my car? / I need help with my car tag renewal

The closer the title is to the consumer's potential question, the better the search works.

Summary and Detail

- Summary: Keep this as brief as possible. We recommend that it's no longer than 120 words. (Learn about limits.)

- Detail: If all of the necessary info on the topic fits into the Summary, use the Summary alone and leave this section empty. If you use this section, keep the content as brief as possible while still covering the topic exhaustively. It's advisable to structure the content so that it's easy to read. Avoid using questions in this section, especially questions that duplicate the title.

Learn the basic concepts about Summary and Detail.
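The 120-word recommendation for the Summary is easy to check before you add or import an article. The following is a minimal sketch, not part of KnowledgeAI™ itself: the function names are hypothetical, and it assumes that a "word" is any whitespace-separated token.

```python
MAX_SUMMARY_WORDS = 120  # recommended ceiling from the guidance above


def summary_word_count(summary: str) -> int:
    """Count whitespace-separated words in a summary (assumed definition of a word)."""
    return len(summary.split())


def is_summary_within_recommendation(summary: str, limit: int = MAX_SUMMARY_WORDS) -> bool:
    """Return True when the summary is at or under the recommended length."""
    return summary_word_count(summary) <= limit


if __name__ == "__main__":
    draft = "To renew your car registration, sign in and choose Renew."
    print(summary_word_count(draft), is_summary_within_recommendation(draft))
```

A check like this only flags long summaries; deciding what to move into Detail or into a separate article remains an editorial decision.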

Tags

Tags are used by AI Search. To increase the accuracy of knowledge base search results, add tags.

Categories

Categories are used by AI Search. Take advantage of categories.

Categories also make it easier to find articles within the knowledge base when you're in KnowledgeAI™.

Use only alphanumeric and underscore characters in the category name; only these are permitted.
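If you prepare category names in a script before adding them, you can validate them against this rule up front. The sketch below is illustrative only: the function names are hypothetical, and it assumes that "alphanumeric" means the ASCII letters A-Z and a-z plus the digits 0-9.

```python
import re

# Letters, digits, and underscores only, per the rule above.
# Assumption: "alphanumeric" is read here as ASCII A-Z, a-z, 0-9.
_CATEGORY_NAME = re.compile(r"[A-Za-z0-9_]+")


def is_valid_category_name(name: str) -> bool:
    """Return True when the whole name consists of permitted characters."""
    return _CATEGORY_NAME.fullmatch(name) is not None


def suggest_category_name(raw: str) -> str:
    """Replace each run of disallowed characters with one underscore,
    then trim underscores from both ends."""
    return re.sub(r"[^A-Za-z0-9_]+", "_", raw.strip()).strip("_")


if __name__ == "__main__":
    for candidate in ["car_registration", "Billing & Payments"]:
        print(candidate, is_valid_category_name(candidate), suggest_category_name(candidate))
```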

Considering the number of articles

When using AI Search to find and serve answers, the more articles that exist in one knowledge base, the better the search performs. That said, keep in mind the article limit, and take care to avoid redundant articles.

When using only intent matching (NLU) to find and serve answers, follow these guidelines:

- A good guideline is 75-100 articles in a knowledge base. Keep in mind that every article requires some level of training if you’re going to use NLU.

- If you have a knowledge base that exceeds 75-100 articles, consider splitting the knowledge base into smaller ones based on category, likewise splitting the intents into domains based on category, and adding multiple knowledge base integrations. Then have the NLU match the consumer’s question to the category-based intent and search the applicable knowledge base. This yields a faster response during the conversation.
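The split described above can be planned offline before you create the smaller knowledge bases. This is a rough sketch under stated assumptions: articles are represented as plain dictionaries with a hypothetical `category` key, the upper end of the 75-100 guideline is used as the cutoff, and the function names are invented for illustration. It does not call any KnowledgeAI™ API.

```python
from collections import defaultdict

NLU_ARTICLE_GUIDELINE = 100  # upper end of the 75-100 guideline above


def split_by_category(articles):
    """Group article dicts by their 'category' key (hypothetical field name)."""
    groups = defaultdict(list)
    for article in articles:
        groups[article.get("category", "uncategorized")].append(article)
    return dict(groups)


def plan_knowledge_bases(articles, guideline=NLU_ARTICLE_GUIDELINE):
    """Keep a single knowledge base when it is within the guideline;
    otherwise propose one knowledge base per category."""
    if len(articles) <= guideline:
        return {"all": list(articles)}
    return split_by_category(articles)


if __name__ == "__main__":
    sample = [
        {"title": f"Article {i}", "category": "billing" if i % 2 else "shipping"}
        for i in range(120)
    ]
    for name, group in plan_knowledge_bases(sample).items():
        print(name, len(group))
```

Each resulting group would then map to its own knowledge base, intent domain, and knowledge base integration. A category that still exceeds the guideline would need a further split.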

Raising the quality of answers

There are several best practices you can follow to raise the quality of answers:

Article creation

If you created the articles by importing content, always check for import errors and substantively review the articles that were created.

Article length

Evaluate whether long articles can be broken into smaller ones.

Confidence thresholds

KnowledgeAI™ integrations within LivePerson Conversation Builder bots (KnowledgeAI interaction, KnowledgeAI integration) and the settings within Conversation Assist both allow you to specify a “threshold” that matched articles must meet to be returned as results. We recommend a threshold of “GOOD” or better for best performance.
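To make the effect of a threshold concrete, the sketch below filters a list of matches by confidence label. It is a conceptual illustration only, not the product's implementation: only “GOOD” is named in the text above, so the other labels, their ordering, and the `confidence` key are assumptions made for this example.

```python
# Assumed ordering of confidence labels, lowest to highest. Only "GOOD" appears
# in the text above; the other labels are illustrative placeholders.
CONFIDENCE_ORDER = ["POOR", "FAIR", "FAIR_PLUS", "GOOD", "VERY_GOOD"]


def meets_threshold(label: str, threshold: str = "GOOD") -> bool:
    """Return True when the label ranks at or above the threshold."""
    return CONFIDENCE_ORDER.index(label) >= CONFIDENCE_ORDER.index(threshold)


def filter_matches(matches, threshold="GOOD"):
    """Keep matches whose 'confidence' (hypothetical key) meets the threshold."""
    return [m for m in matches if meets_threshold(m["confidence"], threshold)]


if __name__ == "__main__":
    matches = [
        {"title": "How do I renew my car registration?", "confidence": "VERY_GOOD"},
        {"title": "Do we have a company org chart?", "confidence": "FAIR"},
    ]
    for match in filter_matches(matches):
        print(match["title"])
```

Lowering the threshold returns more articles at the cost of weaker matches, which is why “GOOD” or better is the recommended starting point.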

Article matching

The actual KnowledgeAI search for relevant answers (matched articles) in your knowledge base is an important part of any KnowledgeAI integration.

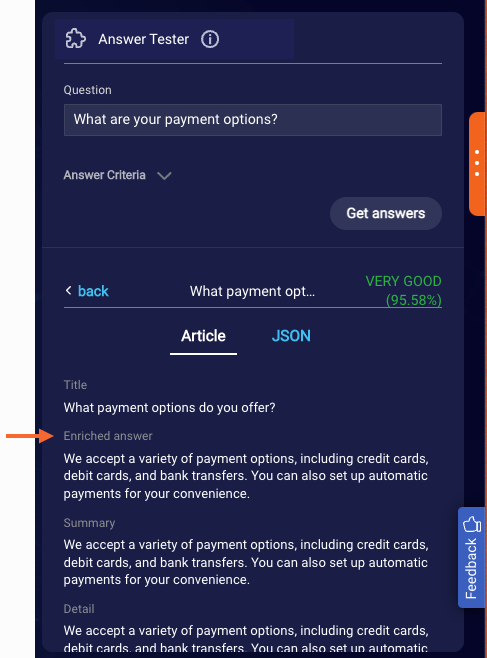

Before you get too far with your use case (Conversation Assist, Conversation Builder bot, etc.), use the Answer Tester tool to test the article matching. This can help to ensure you get the performance you expect.

Here below, we're testing the article matching when using enriched answers.

If, during testing, you find there’s an article that isn’t returned by AI Search as the top answer, associate an intent with the article.

Integrating with a KMS or CMS

Take stock of the content in the KMS/CMS to assess its readiness for Conversational AI.

In general, ensure messages are short and focused. Sending content that’s too long or complex can create a poor consumer experience. For the best experience, consider the following:

- Make sure that all required info (title, summary, etc.) is as brief as possible.

- If you require more than a brief answer, use a content URL, so the consumer can be directed to an external location for more information.

- Use rich content, such as images.

- Consider the channels you are serving when preparing your content. For example, SMS messaging can only support very simple textual content.

Keep in mind that, while knowledge bases do support URLs for video and audio, Video and Audio statements currently aren’t supported by Conversational Cloud. For this reason, they can’t be added to dialogs in Conversation Builder. As an alternative for video, you can use a Text statement that includes a video URL as a link.

Using answers enriched via Generative AI

Prompts

In the context of Large Language Models (LLMs), the prompt is the text or input that is given to the model in order to receive generated text as output. Prompts can be a single sentence, a paragraph, or even a series of instructions or examples.

The LLM uses the provided prompt to understand the context and generate a coherent and relevant response. The quality and relevance of the generated response depend on the clarity of the instructions and how well the prompt conveys your intent. What's more, the style of the prompt impacts the degree of risk for hallucinations.

For best performance, ensure your prompts follow our best practices for writing prompts. Also see our guidance on migrating to GPT-4o mini.

Hallucinations

Using enriched answers created via Generative AI? Concerned about hallucinations? Consider turning on enriched answers in bots that aren’t consumer-facing first (e.g., in a support bot for your internal field team), or in Conversation Assist first. An internal bot is still an automated experience, but it’s safer because the conversations are with your internal employees. Conversation Assist is a bit more consumer-facing, but it has an intermediary safety measure, namely, your agents. They can review the quality of the enriched answers and edit them if necessary, before sending them to consumers. Once you’re satisfied with the results in these areas, you can add support in consumer-facing bots.

More Generative AI best practices

See the relevant section for your use case:

- Best practices for usage in Conversation Assist

- Best practices for usage in Conversation Builder bots

Using LivePerson NLU

Knowledge Base intents are a legacy feature. If your internal knowledge base uses them, the LivePerson (Legacy) engine is used behind the scenes for intent matching. For better performance and a more scalable solution, LivePerson recommends that you convert from Knowledge Base intents to Domain intents as soon as possible. This allows you to associate a domain that uses the LivePerson engine (or a third-party engine). The LivePerson engine offers many benefits over LivePerson (Legacy).

That said, you can also use our powerful AI Search instead of Natural Language Understanding. It's ready out of the box: no setup and no intents are required. Learn about search methods.

Limits

To promote best practices, limits are enforced for the number of articles, the length of fields, and so on.