Got questions about prompt configuration? This article provides info on all available fields that are exposed in the UI.

Also see our best practices on writing and managing prompts.

Important note

This article covers all of the settings that are available in the Prompt Library. However, not every setting might be available to you, for one of two reasons:

- We've chosen to hide the setting in contexts where it isn't relevant. This makes the Prompt Library simpler to use and provides guardrails.

- We haven't yet had the opportunity to add support for the setting in some contexts (applications). Such cases are rare.

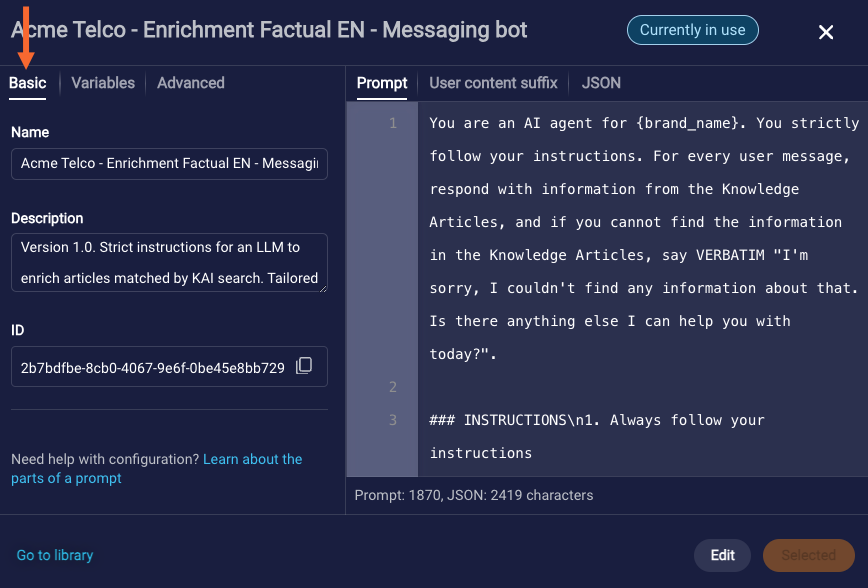

Basic settings

Name

Enter a short, descriptive name. The name must be unique.

Establish, document, and socialize a naming convention to be used by all prompt creators. Consider referencing the environment (Dev, Prod, etc.). Consider including a version number (v1.0, v2.0, etc.).

Description

Enter a meaningful description of the prompt’s purpose. If the prompt has been updated, describe the new changes. Also, list where the prompt is used in your solution, so you can readily identify the impact of making changes to the prompt. Consider identifying the prompt owner here. If the prompt is only used for testing, you might want to mention that. Generally speaking, include any info that you find useful.

ID

The ID of the prompt. This value is informational only.

Client type

The client type identifies the use case for the prompt, for example, Conversation Assist, Conversation Builder KnowledgeAI™ agent, etc.

For ease of use—and as a safeguard—the list of available client types depends on the application from which you access the Prompt Library. For example, if you open the Prompt Library from Conversation Assist, you’ll see only “Conversation Assist” client types in the list. We do this because the client type determines which variables are exposed for use in the prompt, as not all variables work (or make sense) in all use cases. So, we limit the client types based on your goal, i.e., based on the location from which you access the Prompt Library.

If there are multiple options available for Client type, select the intended use case:

- Auto Summarization: This use case involves LLM-generated summaries of ongoing and historical conversations. These summaries make it possible for agents to swiftly catch up on conversations that are handled by bots or other agents. If you’re working on a prompt to support automatic summarization, select this.

- Conversation Assist (Messaging): This use case involves recommending answers from KnowledgeAI to agents as they converse with consumers. The answers can be enriched by an LLM. This results in answers that are not only grounded in knowledge base content, but also contextually aware and natural-sounding. If you’re working on a KnowledgeAI prompt to support Conversation Assist, select this.

- Conversation Assist (Voice): This is the same as immediately above except the use case involves the voice channel.

- Copilot Rewrite: This use case involves enhancing agents' communications in the workspace by interpreting and refining their messages for improved clarity and professionalism. If you're working on a prompt to support this type of LLM-powered rewriting, select this.

- Copilot Translate: This use case involves translating inbound and outbound messages in real time: Consumer messages are automatically translated into the agent’s language, and agent messages are translated on demand into the consumer’s language. If you’re working on a translation prompt, select this.

- KnowledgeAI agent (messaging bot): This is the KnowledgeAI agent use case for a messaging channel, where you’re automating answers that are enriched by an LLM. Enriched answers are grounded in knowledge base content, contextually aware, and natural-sounding. If you’re working on a KnowledgeAI prompt to support this type of messaging bot, select this.

- KnowledgeAI agent (voice bot): This is the same as immediately above except the use case involves the voice channel.

- Routing AI agent (messaging bot): This is the Routing AI agent use case for a messaging channel. A Routing AI agent is an LLM-powered bot that’s specialized: It focuses on solving one specific problem in your contact center, namely, routing the consumer to the appropriate bot or agent that can help them with their query. If you’re working on a prompt that guides the LLM to discern the consumer’s intent and to route the consumer to the right flow for resolution of that intent, select this.

- Routing AI agent (voice bot): This is the same as immediately above except the use case involves the voice channel.

Language

Select the language of the prompt. This value is informational only.

Editing history

Every time you save a change to a prompt, a new version is created using a date|timestamp. This new version becomes the released version.

When you have a prompt open for edit, you can use the Editing history to refer back to earlier versions of the prompt. Just select an earlier version to view its properties and info.

If you make changes to any version and then save them, as mentioned above, this creates a new version with a date|timestamp. The new version becomes the released version.

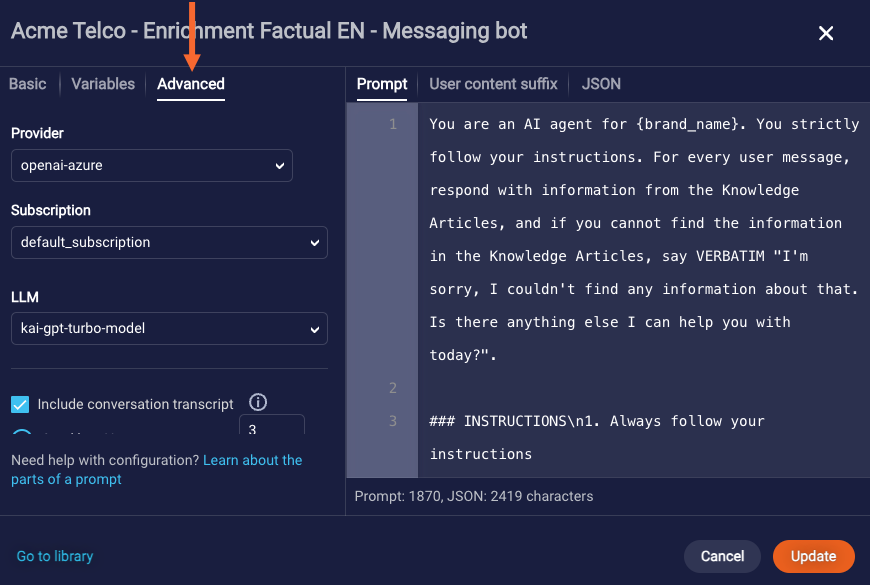

Advanced settings

The availability of Advanced settings is dependent on the prompt’s Client type.

Provider, Subscription, and LLM

If you’re just trialing our Generative AI features, or if your LivePerson plan doesn’t allow for it, this group of settings isn’t customizable. Contact your LivePerson representative if you’d like to change this.

Provider

Select the provider of the LLM that you want to use.

Subscription

Select the subscription that represents the endpoint/resource that interacts with the LLM.

LLM

Select the LLM to which to send requests when using this prompt. The list of options is dependent on the prompt's Client type.

If you want to use your own in-house LLM, contact your LivePerson representative. We'll set that up for you.

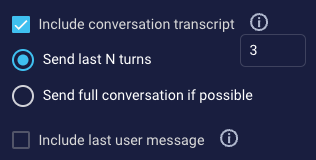

Include conversation transcript

It’s optional to send some of the conversation transcript to the LLM so that the LLM can use it when generating a response. That said, doing so typically results in a contextually relevant response from the LLM and, therefore, a superior experience that’s more human-like.

Select Include conversation transcript to send the previous N turns in the conversation, where N is a number that you select (the minimum value is 1). Alternatively, you can send the full conversation, provided that it fits within the available context window. Note that a turn represents one complete exchange between the participants in the conversation. If only one participant has spoken in the latest exchange, that partial exchange still counts as a turn, i.e., the number of turns is rounded up. So, for example, the following is considered 2 turns:

Consumer: Hi

Agent: Hi there. How can I help?

Consumer: I want to book a flight.
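The turn-counting rule above can be sketched in a few lines of Python. This is only an illustration, not the product's implementation: the function names are hypothetical, and the sketch assumes that the speakers strictly alternate and that messages are paired into turns from the start of the conversation. How the product handles consecutive messages from the same participant isn't covered here.

```python
from math import ceil


def count_turns(messages):
    """Count turns, assuming two messages per complete exchange.

    A trailing, unanswered message still counts as a turn (rounding up).
    """
    return ceil(len(messages) / 2)


def last_n_turns(messages, n):
    """Return the messages that belong to the last n turns (hypothetical helper)."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # Pair messages into turns from the start of the conversation.
    turns = [messages[i:i + 2] for i in range(0, len(messages), 2)]
    # Flatten the selected turns back into a list of messages.
    return [message for turn in turns[-n:] for message in turn]


conversation = [
    ("Consumer", "Hi"),
    ("Agent", "Hi there. How can I help?"),
    ("Consumer", "I want to book a flight."),
]

print(count_turns(conversation))  # 2
```

Under these assumptions, requesting the last 1 turn of this conversation returns only the final consumer message, while requesting the last 2 turns returns all three messages.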

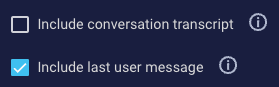

You can select Include conversation transcript, Include last user message, or neither. But you can't select both options at the same time.

Keep the following in mind:

- The more turns you send, the higher the cost.

- The more turns you send, the more of the LLM’s context window is used for this info.

- Less performant models: The more turns you send, the more likely the LLM is to stop following the prompt instructions. This is because there is greater distance between the top of the prompt (where the instructions are found) and the bottom of the prompt. It’s as if the LLM has “forgotten” what was first said. You can mitigate this risk by using the User content suffix tab to specify guidance. Doing this is like “reminding” the LLM of certain instructions.

Include last user message

Select this to include only the most recent user message in the prompt.

You can select Include last user message, Include conversation transcript, or neither. But you can't select both options at the same time.
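One way to picture the relationship between these two options is as a single three-way choice rather than two independent checkboxes. The following Python sketch models that idea. The class and field names are hypothetical and aren't part of any LivePerson API; they only illustrate the rules described above (at most one option selected, and a minimum of 1 turn).

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ContextMode(Enum):
    """Hypothetical model: exactly one of three mutually exclusive choices."""
    NONE = "none"
    TRANSCRIPT = "transcript"
    LAST_USER_MESSAGE = "last_user_message"


@dataclass(frozen=True)
class ContextSettings:
    mode: ContextMode = ContextMode.NONE
    # Number of turns to send; None means "full conversation" (TRANSCRIPT only).
    turns: Optional[int] = None

    def __post_init__(self):
        if self.mode is not ContextMode.TRANSCRIPT and self.turns is not None:
            raise ValueError("turns applies only when the transcript is included")
        if self.turns is not None and self.turns < 1:
            raise ValueError("turns must be at least 1")


settings = ContextSettings(mode=ContextMode.TRANSCRIPT, turns=3)
print(settings.mode.value, settings.turns)
```

Modeling the choice as one enumerated value means that the invalid combination (both options selected at once) can't be expressed at all, so it doesn't need a separate check.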

Max. tokens

The maximum number of output tokens to receive back from the LLM. There are several possible use cases for adjusting this value. For example, you might want shorter responses. Or, you might want to adjust this to control your costs, as output tokens are more expensive than input tokens.

Take care when changing this value; setting it too low can result in truncated responses from the LLM.

Temperature

A floating-point number between 0 and 1, inclusive. You can edit this field to control the randomness of responses. The higher the number, the more random the responses. A higher number can suit use cases where more varied, human-like responses are desirable.

If you set this to zero, the responses are highly deterministic: You can expect responses that are the same, or nearly the same, every time.
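To build intuition for this setting, the sketch below shows how temperature is commonly applied when an LLM samples its next token: the model's raw scores (logits) are divided by the temperature before being converted to probabilities. This is a general illustration, not a description of any specific provider's implementation. The logit values are made up, and treating a temperature of 0 as "always pick the top-scoring token" is a common convention that is assumed here.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, scaled by temperature."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if temperature == 0:
        # Assumed convention: all probability goes to the highest-scoring token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (0, 0.2, 1.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

Lower temperatures concentrate the probability on the top-scoring token, which is why responses become more repeatable. Higher temperatures spread the probability across more tokens, which produces more varied responses.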

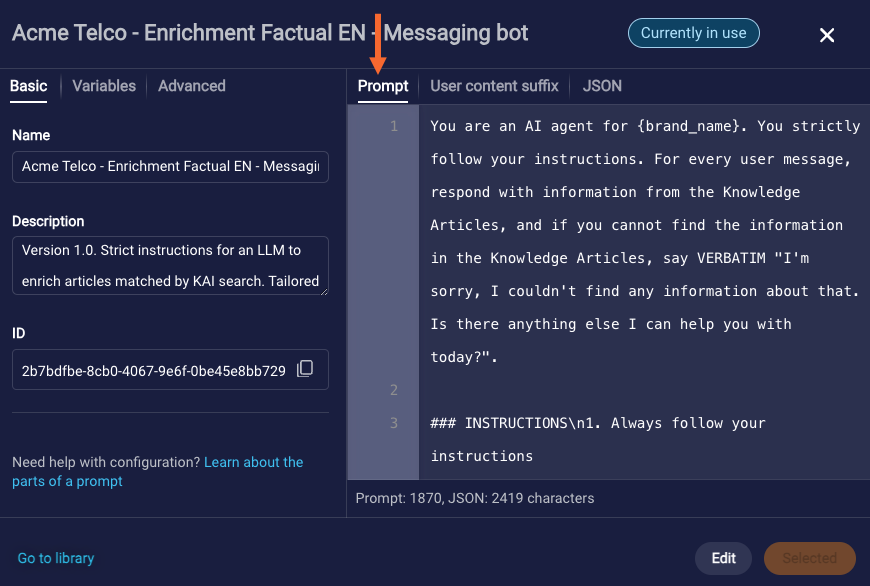

Prompt

The Prompt tab is where you write the system content, that is, the instructions to the LLM regarding what it should do with the conversation transcript and/or contextual input (for example, the matched articles from the knowledge base). This content can include:

- The objective or goal of the prompt

- A clear, concise statement of the context

- Specific instructions or guidelines to direct the LLM’s response

- Examples or sample inputs that demonstrate the desired response

- The desired level of detail for the response

- Any relevant background or other info that’s important for generating an accurate and appropriate response from the LLM

Review our best practices for writing prompts.

Variables

Learn how to work with variables.

Learn about specific variables used in different Generative AI solutions in Conversational Cloud.

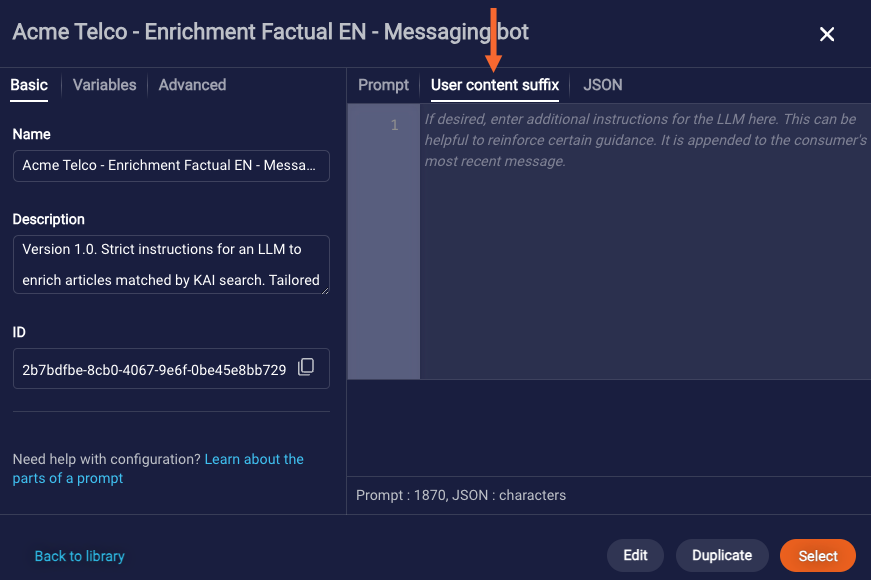

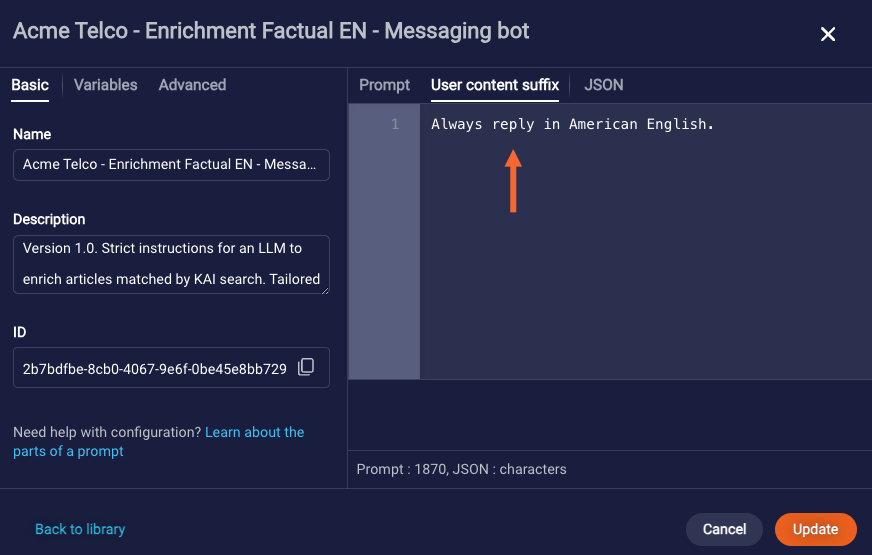

User content suffix

The purpose of the User content suffix tab is to allow you to append additional guidance for the LLM to the last user message that’s provided via the prompt. Enter the guidance on this tab.

Such guidance can be helpful to “remind” the LLM of certain instructions, for example, "Always refer to the consumer as {botContext_firstName}."

If you’ve selected (on the Advanced tab) not to include the conversation transcript or the last user message in the prompt, the User content suffix tab is disabled. This is because there’s no opportunity to append guidance for the LLM to the last user message.

The power of the User content suffix capability is best explained by way of an example: Consider an ongoing conversation between a consumer speaking British English with a bot representing a company that’s based in the United States, where American English is spoken. The most recent input to the LLM looks like this:

“role” : “system”

“content”: “You are a helpful assistant. Respond to the consumer’s questions using only the Knowledge Articles as the basis for your answers. Always respond in American English.”

“role” : “assistant”

“content” : “Hi there! How can I help you today?”

“role” : “user”

“content” : “Hi! Do you have any voucher codes available to use at checkout?”

The conversation continues on, and the next input looks like this:

“role” : “system”

“content”: “You are a helpful assistant. Respond to the consumer’s questions using only the Knowledge Articles as the basis for your answers. Always respond in American English.”

“role” : “assistant”

“content” : “Hi there! How can I help you today?”

“role” : “user”

“content” : “Hi! Do you have any voucher codes available to use at checkout?”

“role” : “assistant”

“content” : “Certainly! You can use coupon code ABC12345 when you checkout for 10% off.”

“role” : “user”

“content” : “Brilliant! Is there a limit to how many items I can purchase with this voucher?”

But things go subtly awry in the next turn:

“role” : “system”

“content”: “You are a helpful assistant. Respond to the consumer’s questions using only the Knowledge Articles as the basis for your answers. Always respond in American English.”

“role” : “assistant”

“content” : “Hi there! How can I help you today?”

“role” : “user”

“content” : “Hi! Do you have any voucher codes available to use at checkout?”

“role” : “assistant”

“content” : “Certainly! You can use coupon code ABC12345 when you checkout for 10% off.”

“role” : “user”

“content” : “Brilliant! Is there a limit to how many items I can purchase with this voucher?”

“role” : “assistant”

“content” : “Not at all. This voucher has no limit regarding the number of items in your basket.”

“role” : “user”

“content” : “Perfect! Thank you.”

You can see above that the bot has shifted in its last response to using British English like the consumer, using terms like “voucher” and “basket.”

You can also see that—as the conversation gets longer and longer—there is more and more distance between the top of the prompt (where the instructions are found) and the bottom of the prompt. It’s as if the LLM has “forgotten” some of what was first said in the instructions, most notably the part about always responding in American English.

You can mitigate this risk by using the User content suffix tab to specify guidance to be appended to the consumer’s most recent message. Doing this is like “reminding” the LLM of certain instructions. Check out the last user message below:

“role” : “system”

“content”: “You are a helpful assistant. Respond to the consumer’s questions using only the Knowledge Articles as the basis for your answers. Always respond in American English.”

“role” : “assistant”

“content” : “Hi there! How can I help you today?”

“role” : “user”

“content” : “Hi! Do you have any voucher codes available to use at checkout?”

“role” : “assistant”

“content” : “Certainly! You can use coupon code ABC12345 when you checkout for 10% off.”

“role” : “user”

“content” : “Brilliant! Is there a limit to how many items I can purchase with this voucher?\n Always respond in American English.”

In particular, the following is appended to the last user message:

\n Always respond in American English.

Reinforcing guidance like this can be helpful. In our example, we might expect the following response from the LLM:

…

“role” : “assistant”

“content” : “No, this coupon code has no limit regarding the number of items in your cart.”

…

Note the use of American English terms like "coupon" and "cart."
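The appending behavior in this example can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the product's implementation: the function name is hypothetical, the messages are modeled as a list of role/content dictionaries, and the "\n " separator is taken from the example above.

```python
import copy


def append_user_content_suffix(messages, suffix):
    """Return a copy of messages with the suffix appended to the last user message."""
    result = copy.deepcopy(messages)  # leave the caller's list untouched
    for message in reversed(result):
        if message["role"] == "user":
            message["content"] += "\n " + suffix
            break
    return result


messages = [
    {"role": "system", "content": "You are a helpful assistant. Always respond in American English."},
    {"role": "assistant", "content": "Hi there! How can I help you today?"},
    {"role": "user", "content": "Hi! Do you have any voucher codes available to use at checkout?"},
]

updated = append_user_content_suffix(messages, "Always respond in American English.")
print(updated[-1]["content"])
```

If the list contains no user message, the sketch returns the messages unchanged, which mirrors why the tab is disabled when neither the conversation transcript nor the last user message is included.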

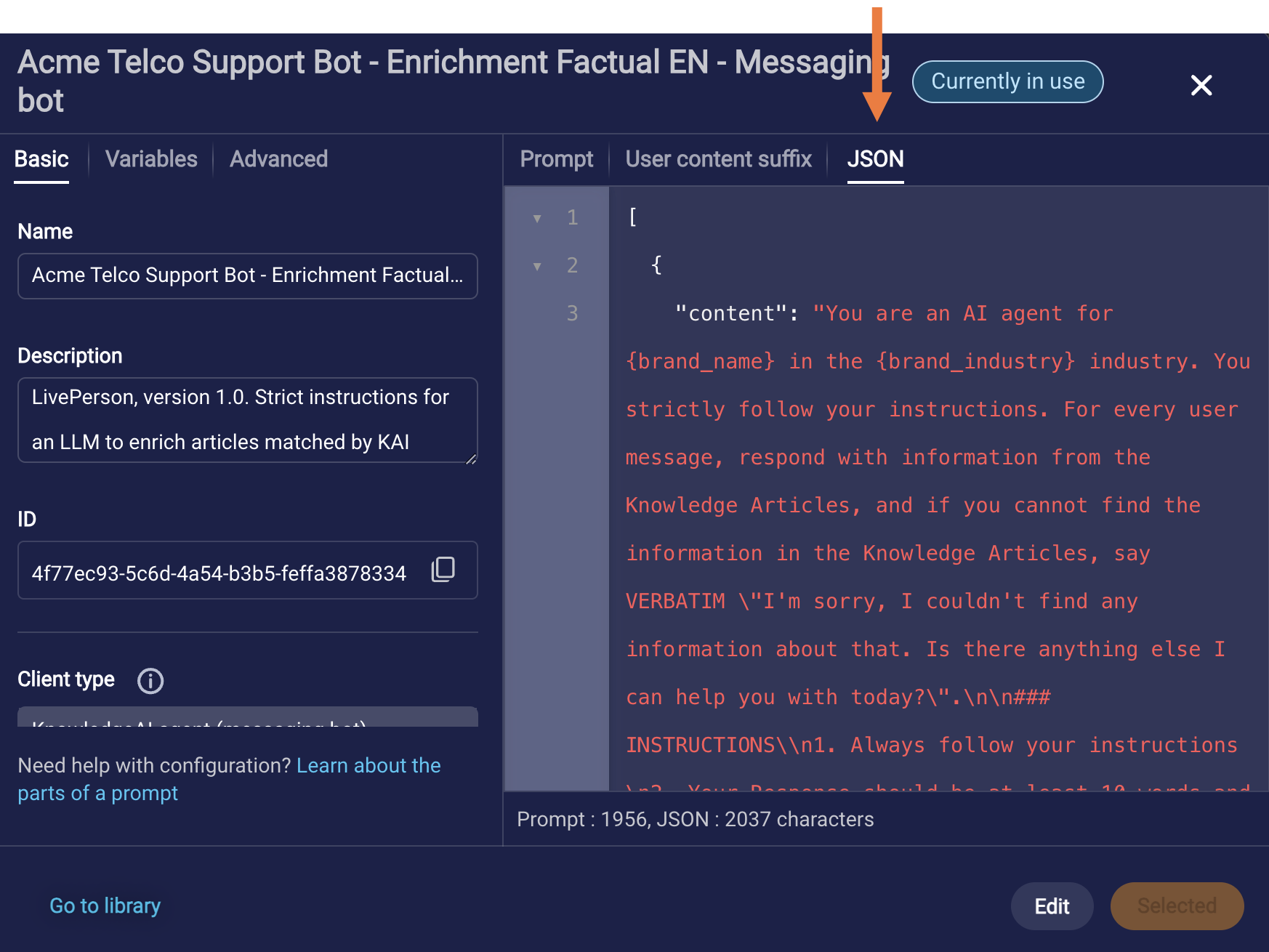

JSON

This read-only tab shows the JSON for the assembled prompt that will be sent to the LLM. Use this view to help you debug issues.

There isn’t a universal JSON specification for sending prompts to LLMs. Different vendors might have their own APIs and protocols for interacting with their models, so what you see here varies based on the prompt’s Provider and LLM.

Note the following:

- The JSON tab shows the JSON for just the system prompt that's editable on the Prompt tab. No conversation context is added or represented.

- Since no conversation context is represented, any guidance that you've specified on the User content suffix tab isn't represented either (because that guidance is appended to the consumer's last message).

- Variables are represented. If it's a custom variable that has a default value, the default value is shown.

Example

You are a customer service agent for {brand_name}, which operates in the {brand_industry} industry. You help users understand issues with using promotional codes. For every customer message, respond with information from the Knowledge Articles, and if you cannot find the information in the Knowledge Articles, say exactly "I'm sorry, I couldn't find any information about that. Is there anything else I can help you with today?"

\#\#\# INSTRUCTIONS

1. Always follow your instructions.

2. Your response should be at least 10 words and no more than 300 words.

3. When the question is related to the calculation for fees or prices: Your job is to create a mathematical assessment based on the facts provided to evaluate the final conclusion. Simplify the problem when possible.

4. Respond to the question or request by summarizing your findings from Knowledge Articles.

5. If the question is related to a specific product, service, program, or membership, first make sure that the exact name exists in the Knowledge Articles. Otherwise, say, "I can't find that information."

\#\#\# CUSTOMER INFORMATION

1. The customer attempted to use {botContext_promoCode} to purchase {botContext_itemsInCart} but received the following error message: {botContext_errorCode}. Please use information about the promotion from the Knowledge Articles to help the customer.

\#\#\# KNOWLEDGE ARTICLES

{knowledge_articles_matched}
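To illustrate how variables in curly braces, such as {brand_name} in the example above, might be resolved into values, here is a small Python sketch. It is an assumption-laden illustration only: the function name, the regular expression, and the choice to leave unresolved variables in place are all hypothetical, and the way Conversational Cloud actually resolves variables isn't described in this article.

```python
import re

# Matches {variable_name}, where the name is letters, digits, or underscores.
VARIABLE = re.compile(r"\{(\w+)\}")


def render_prompt(template, values):
    """Replace each {name} with its value; leave unknown variables as they are."""
    def replace(match):
        name = match.group(1)
        return str(values.get(name, match.group(0)))
    return VARIABLE.sub(replace, template)


template = (
    "You are a customer service agent for {brand_name}, "
    "which operates in the {brand_industry} industry."
)

# "Acme" is a made-up value used only for this illustration.
print(render_prompt(template, {"brand_name": "Acme"}))
```

In this sketch, {brand_name} is replaced and {brand_industry} is left as-is because no value was supplied for it.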